2.3 How to predict the probability of subsequent blood donations?

Authors: Maciej Chylak, Mateusz Grzyb, Dawid Janus (Warsaw University of Technology)

2.3.1 Abstract

Blood donated for transfusions saves millions of lives every year. Reliable prediction of a patient’s blood donation willingness is valuable knowledge for the medical community. Because of that, a better understanding of the described problem is desirable. This work aims to achieve that by focusing on studying machine learning (ML) predictive models for such tasks through methods of explainable artificial intelligence (XAI). We consider an ML model based on a dataset containing information about patients’ blood donation history. Through prudent data pre-processing, model preparation, and the use of various XAI tools, we exhibit how strongly and in what way various features affect the model’s prediction. During our analysis, we uncover meaningful phenomena and patterns hidden in the data itself.

2.3.2 Introduction and motivation

Machine learning (ML) is a part of artificial intelligence that studies computer algorithms, which improve automatically through experience based on provided data. Nowadays, ML algorithms are commonly used for diverse tasks in many fields, including medicine. One of such applications is a reliable prediction of a patient’s blood donation willingness. It provides valuable knowledge for the medical community, allowing for better future blood supply estimation and an improvement of planning and advertising of blood donation campaigns.

ML algorithms learn to make predictions or decisions without being explicitly programmed to do so. It is both a blessing and a curse because, in the case of many models (often called black boxes for the following reason), even its designers cannot explain why such an AI solution has arrived at a specific conclusion. Here, methods of explainable artificial intelligence (XAI) come to the rescue. XAI facilitates humans to understand various artificial intelligence solutions (Barredo Arrieta et al. 2020a). Interest in XAI has increased significantly in recent years, and the intense development of such methods has led to the wide choice of XAI tools that we have today (Maksymiuk et al. 2021). It includes the R package DALEX (Przemyslaw Biecek 2018a), which is the foundation of this work.

Our goal is to prepare and explain a model designed to predict the probability of subsequent blood donations based on a history of a patient’s previous offerings. Careful use of XAI tools allows us to verify model correctness and discover phenomena and patterns hidden in the data. Through this, we aim to both improve understanding of the considered problem and find new ways of creating better prediction methods.

2.3.4 Data analysis and pre-processing

The data, which all the prepared models are based upon, comes from the Blood Transfusion Service Center Data Set, which is available through OpenML website (Vanschoren et al. 2013). The dataset consists of 748 observations (representing individual patients) described by 5 attributes:

- recency - months since last blood donation,

- frequency - total number of blood donations,

- monetary - total amount of donated blood in c.c.,

- time - months since first blood donation,

- donated - a binary variable representing whether she/he donated blood in March 2007.

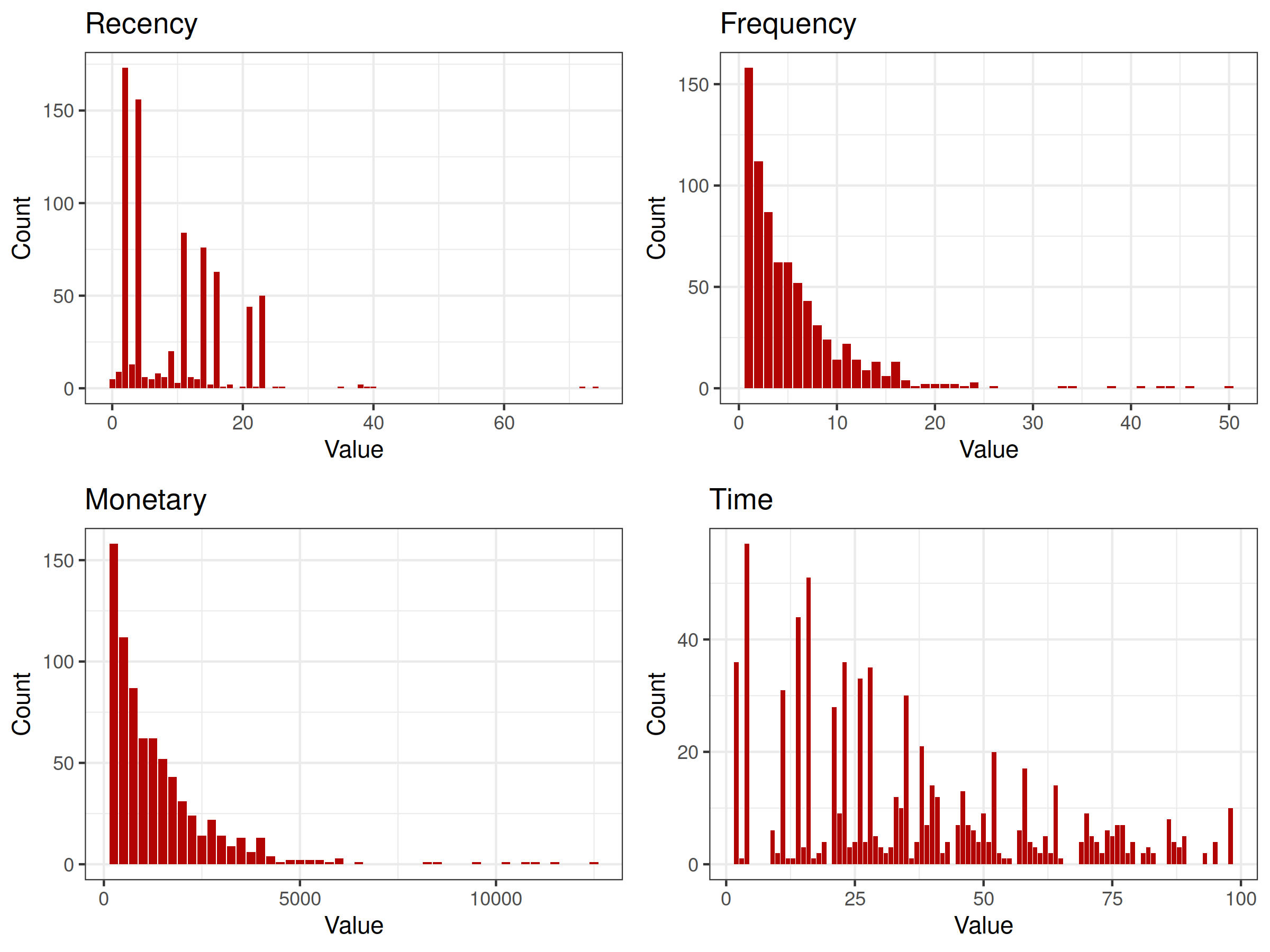

Initial data analysis is a critical process allowing to discover patterns and check assumptions about the data. It is performed with the help of summary statistics and graphical data representations. The short data analysis below is based on two visualizations representing distributions and correlations of variables.

Figure 2.32: distributions of explanatory variables (histogram). It is clearly visible that distributions of Frequency and Monetary variables are identical (excluding support).

Based on the above figure 2.32, an important insight can be made - distributions of Frequency and Monetary variables are identical (excluding support). It probably comes from the fact that during every donation the same amount of blood is drawn. The presence of both of these variables in the final model is pointless.

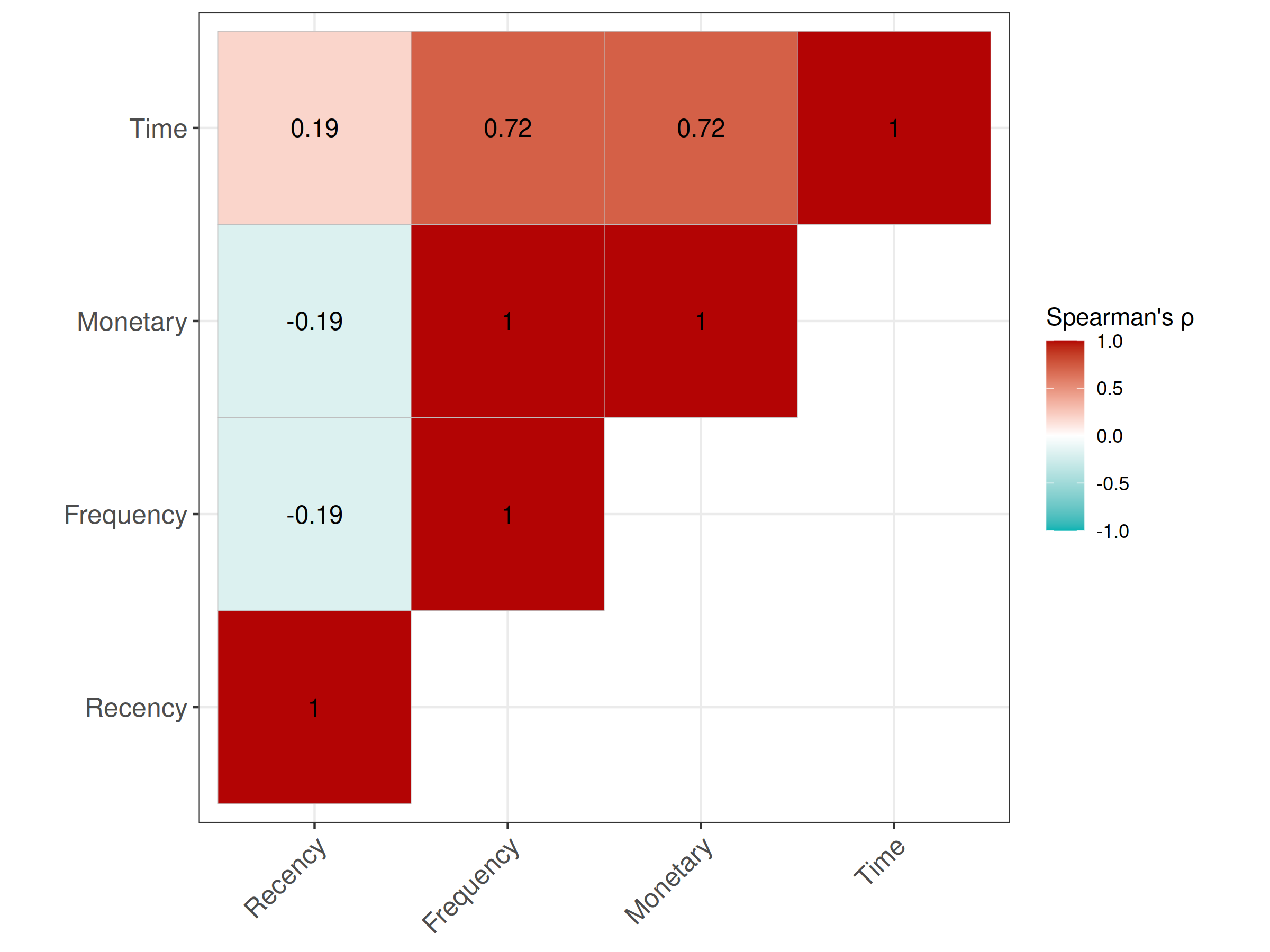

Figure 2.33: correlations of explanatory variables (correlation matrix). Frequency and Monetary variables are perfeclty correlated with each other and strongly correlated with the Time variable.

The above figure 2.33 represents correlations of explanatory variables measured using robust Spearman’s rank correlation coefficient. Apart from the already clear perfect correlation of Monetary and Frequency variables, a strong correlation of Time and Monetary/Frequency variables is visible. It probably comes from the fact that the minimal interval between subsequent donations is strictly controlled. Such dependence can negatively affect model performance and the accuracy of explanations. This potential problem is addressed during the pre-processing of used data.

A simple data pre-processing is conducted, mainly to reduce detected correlations of explanatory variables. Firstly, the variable Monetary is removed from the dataset. The information it carries duplicates information contained in the Frequency variable. Secondly, a derived variable is introduced instead of the Time variable. It is called Intensity and is calculated as follows:

\[\textrm{Intensity} = \frac{\textrm{Frequency}}{\textrm{Time}-\textrm{Recency}}\]

The above equation results in values between around 0.031 and 1. The denominator can be interpreted as a time window bounding all the known donations of a given patient. Spearman’s rank correlation coefficient of the new variable Intensity and the old variable Frequency is -0.46, which is better compared to the previous 0.72 value for the Time and Frequency combination.

2.3.5 Models preparation and selection

According to the OpenML website, Ranger implementation of Random Forests (Wright and Ziegler 2017b) is among the best performing classifiers for the considered task. All the tested models utilize this machine learning algorithm.

Performance of the models is assessed through three measures - basic classification accuracy and more complex area under the Receiver Operating Characteristic curve (ROC AUC) (Bradley 1997) (Fawcett 2006) and area under the Precision-Recall curve (PR AUC) (Raghavan et al. 1989) (Davis and Goadrich 2006). PR AUC is an especially adequate measure for unbalanced classification problems (Saito and Rehmsmeier 2015), which is the case here. Based on the described measures, the best model is chosen from the models trained on the following explanatory variables subsets:

- Recency, Time

- Recency, Frequency,

- Recency, Frequency, Time

- Recency, Frequency, Intensity

The first two models, utilizing only two explanatory variables, perform significantly worse. Out of the last two models, the model utilizing the Time variable is slightly worse than the model utilizing the introduced Intensity variable. The accuracy of the last model is 0.85, other performance measures describing it are presented graphically below:

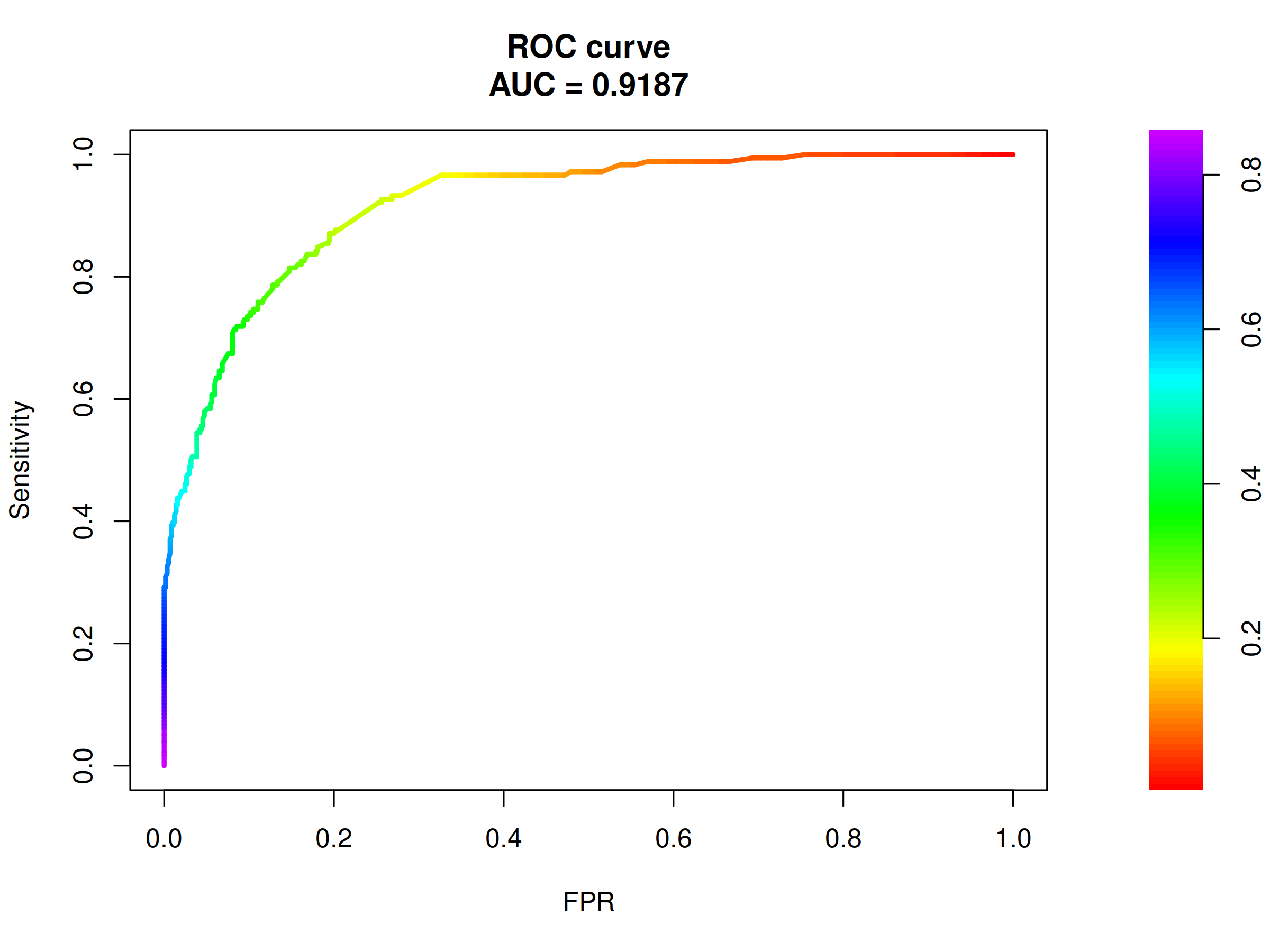

Figure 2.34: ROC curve and corresponding AUC for the selected model. ROC AUC of 0.92 is evidence of good model performance.

ROC curve visible in the above figure 2.34 represents good model performance and the AUC value of almost 0.92 is satisfactory.

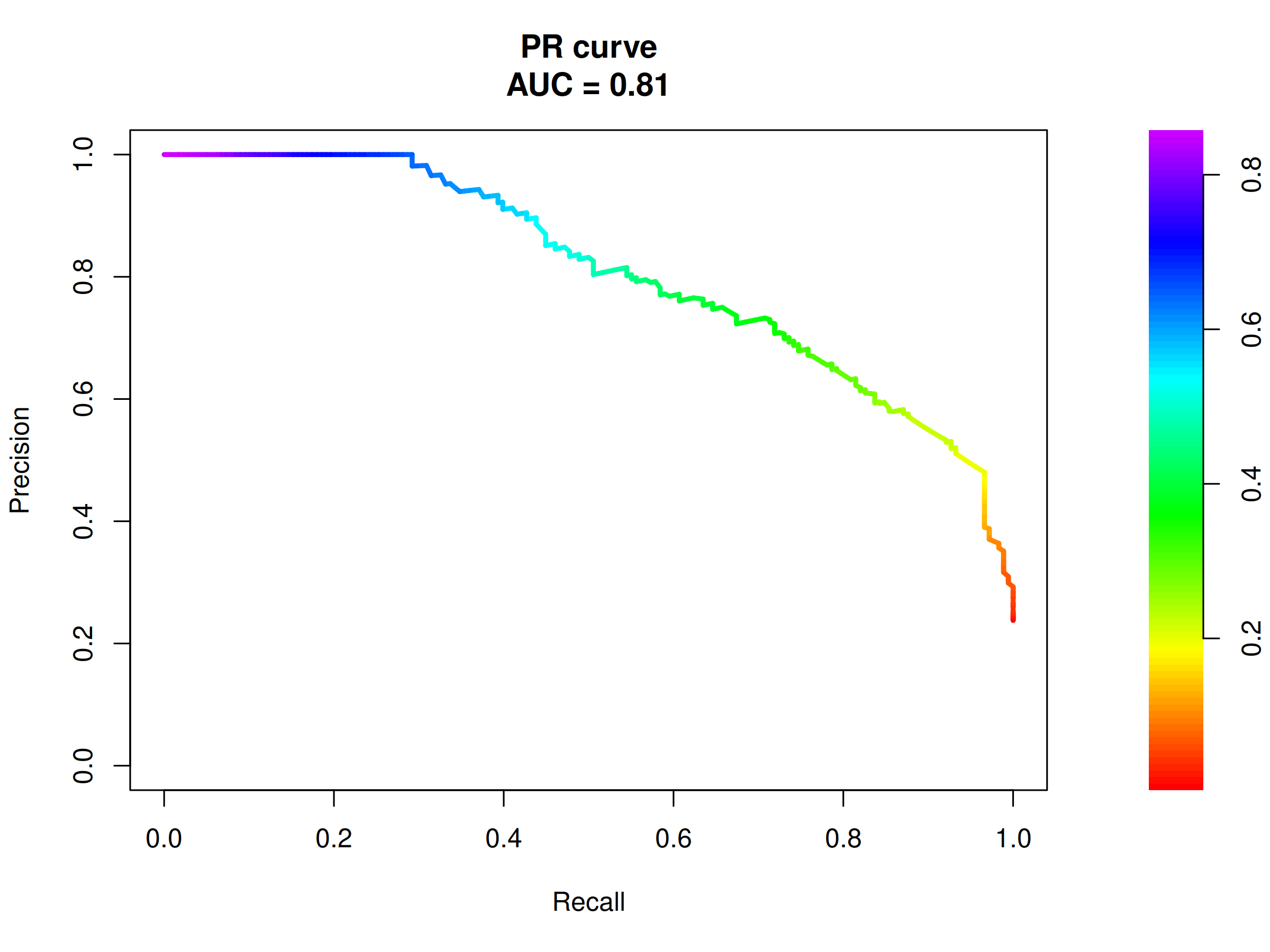

Figure 2.35: PR curve and corresponding AUC for the final model. PR AUC of 0.81 is proof of high model precision, as the baseline score here is 0.238.

The baseline for the ROC AUC is always 0.5, but it is not the case for the PR AUC. Here, the baseline AUC is equal to the proportion of positive observations in the data. In our case it is \(\frac{178}{748}=0.238\). Due to the above, the PR AUC value of around 0.81 visible in the figure 2.35 is also proof of high model precision.

Summarizing the above model selection, the final model used for all the presented explanations is Ranger implementation of Random Forests utilizing Recency, Frequency, and Intensity variables. Its performance measures are at least good, so the prepared explanations have a chance to be accurate.

2.3.6 Global explanations

Global explanations (Barredo Arrieta et al. 2020a) are used to discover how individual explanatory variables affect an average model prediction on the level of the whole dataset. Here, one can learn how strong is the influence of a given variable or how its particular values shape the overall model response. They are also useful for the evaluation of the model’s performance and its diagnostics.

In the case of large numbers of observations, global explanations can be prepared using only a representative fraction of them to save calculations time. Dataset presented in this work can be considered of modest size, and therefore all observations are always used.

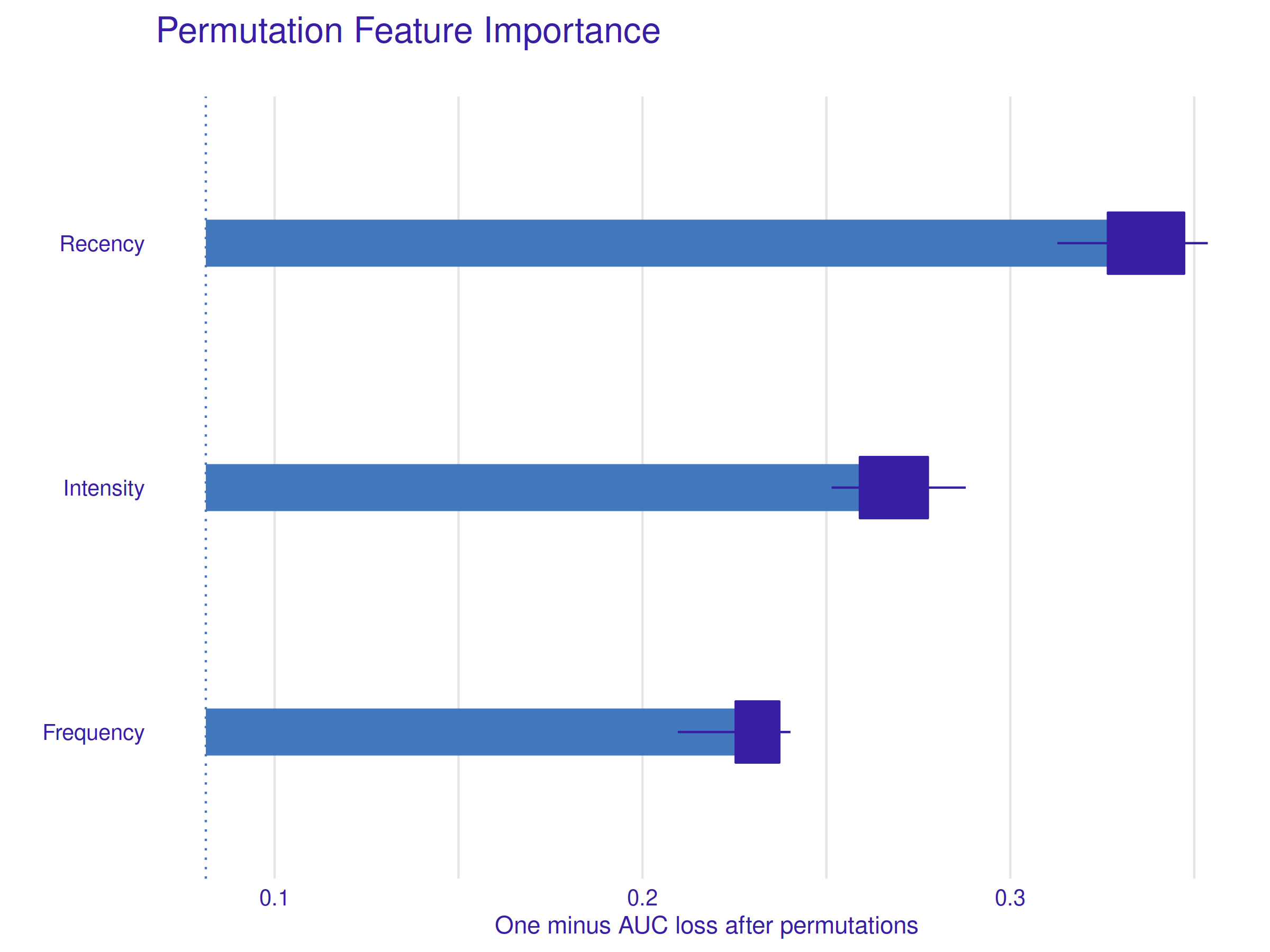

2.3.6.1 Permutation Feature Importance

Permutation Feature Importance (A. Fisher et al. 2018a) is a method of assessing how strongly a given variable affects the overall model prediction. The idea behind it is to measure a change in the model’s performance when it is not allowed to utilize a selected feature. Removal of variables from the model is realized through multiple random permutations of them.

Figure 2.36: Permutation Feature Importance Profile. The Recency variable is the most important one, followed by the Intensity variable and the least significant, but still important, Frequency variable.

Figure 2.36 shows, all explanatory variables have significant importance in the model under study. The most important feature is the Recency (highest loss after the permutations) followed by the derived variable Intensity and the Frequency in the last. The significance of the Recency variable is also well visible later on, especially when local explanations are considered.

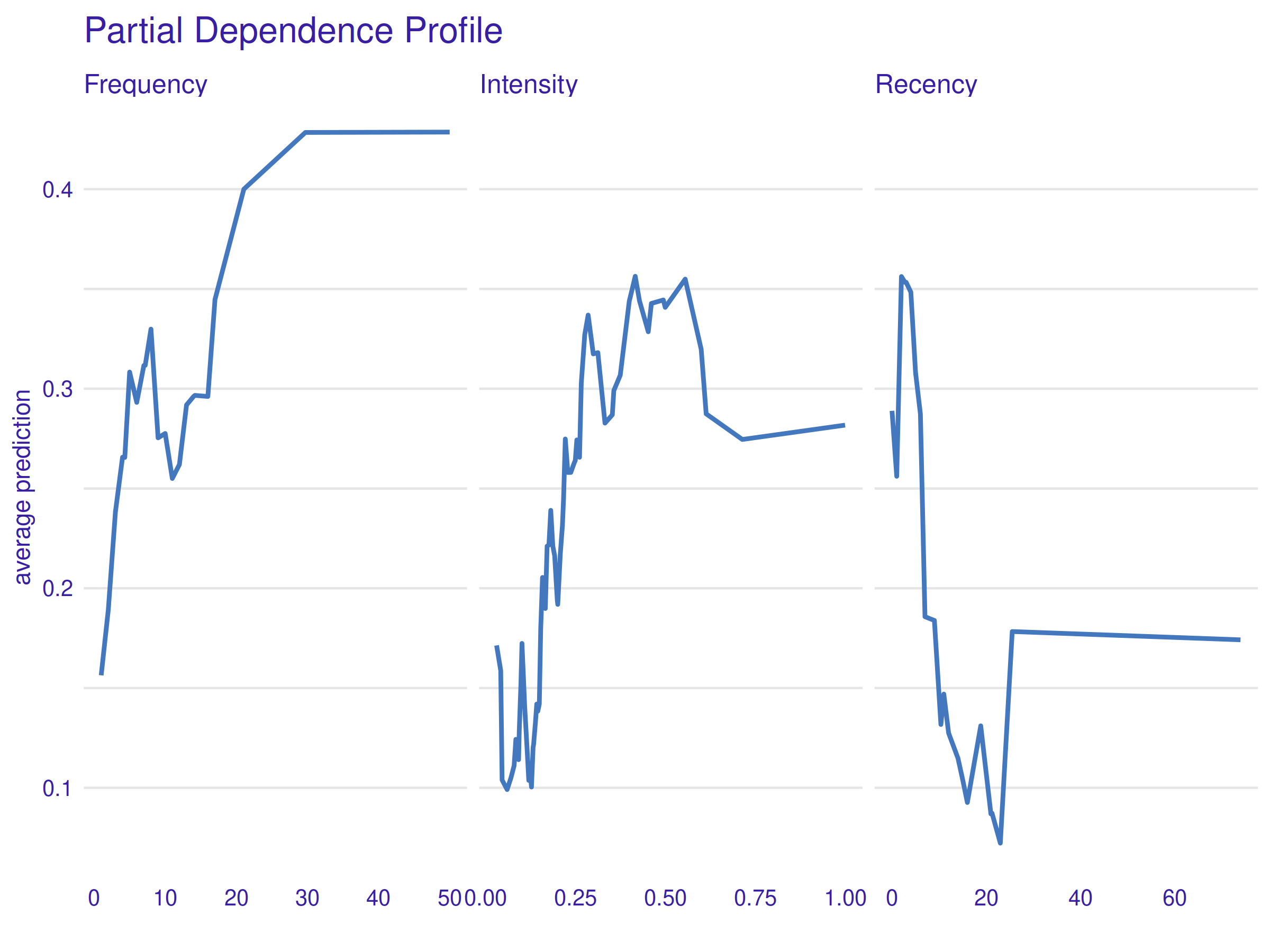

2.3.6.2 Partial Dependence Profile

Partial Dependence Profile (Friedman 2000c) (Brandon M. Greenwell 2017b) allows users to see how the expected model prediction behaves as a function of a given explanatory variable. It is realized through averaging (basic arithmetic mean) of multiple Ceteris-Paribus Profiles, a method described shortly in the Local Explanations section.

Figure 2.37: Partial Dependence Profile. The overall tendency is that higher Frequency and Intensity values affect the prediction positively, whereas the Recency variable effect is inverse. The irregularity of the curves and their odd tails are explained further in the text.

First of all, curves presented in the figure above 2.37 are visibly ragged. It is mostly caused by the selection of the machine learning algorithm - Random Forests contain many if-then rules that can change the final prediction value rapidly, even under small explanatory variable change. Another reason might be model overfitting, but because explaining the model is the primary goal, it is not a problem here, even if relevant. Therefore, it is best to look at the overall shape of yielded curves and ignore fine uneven details.

Visible shapes are largely in line with the intuition - higher Frequency and Intensity values lead to higher average prediction (probability of subsequent blood donation to recall), whereas the impact of Recency is inverse - the lower the value, the higher the prediction. However, some parts of the curves need further investigation - the tails. For the Frequency variable, the profile tail simply flattens, whereas for the other two variables there is a significant change in the mean prediction value. The explanation for this in the case of the Frequency and Recency variables is simple - an insufficient amount of data. There are only eight observations with \(\textrm{Frequency} > 30\) and only nine observations with \(\textrm{Recency} > 24\). It is a worthy reminder that XAI methods can be only as good as the data and the model themselves. Interestingly enough, the case of the sudden drop in the mean prediction as a function of the Intensity variable is much different. It is a genuine pattern contained in the data itself, that the model has learned to take an advantage of. The following table aids greatly in interpreting these results.

| Observations with Intensity > 0.55 (group A) | Observations with Intensity < 0.55 (group B) | |

|---|---|---|

| Total number of observations | 241 | 507 |

| Number of positive cases | 42 | 136 |

Table 2.2 shows, in group A there is 17 % of positive observations, and in group B there is 27 %. Using Fisher’s exact test (R. A. Fisher 1922) it can be shown that the difference in proportions between mentioned groups is statistically significant (p-value 0.0028). A probable hypothesis is that above a certain Intensity threshold there is an increased chance of a patient’s burnout caused by too frequent donations. This result has great implications, as forcing a slightly prolonged time interval between a given patient’s donations may decrease this risk and enlarge the total number of donations eventually.

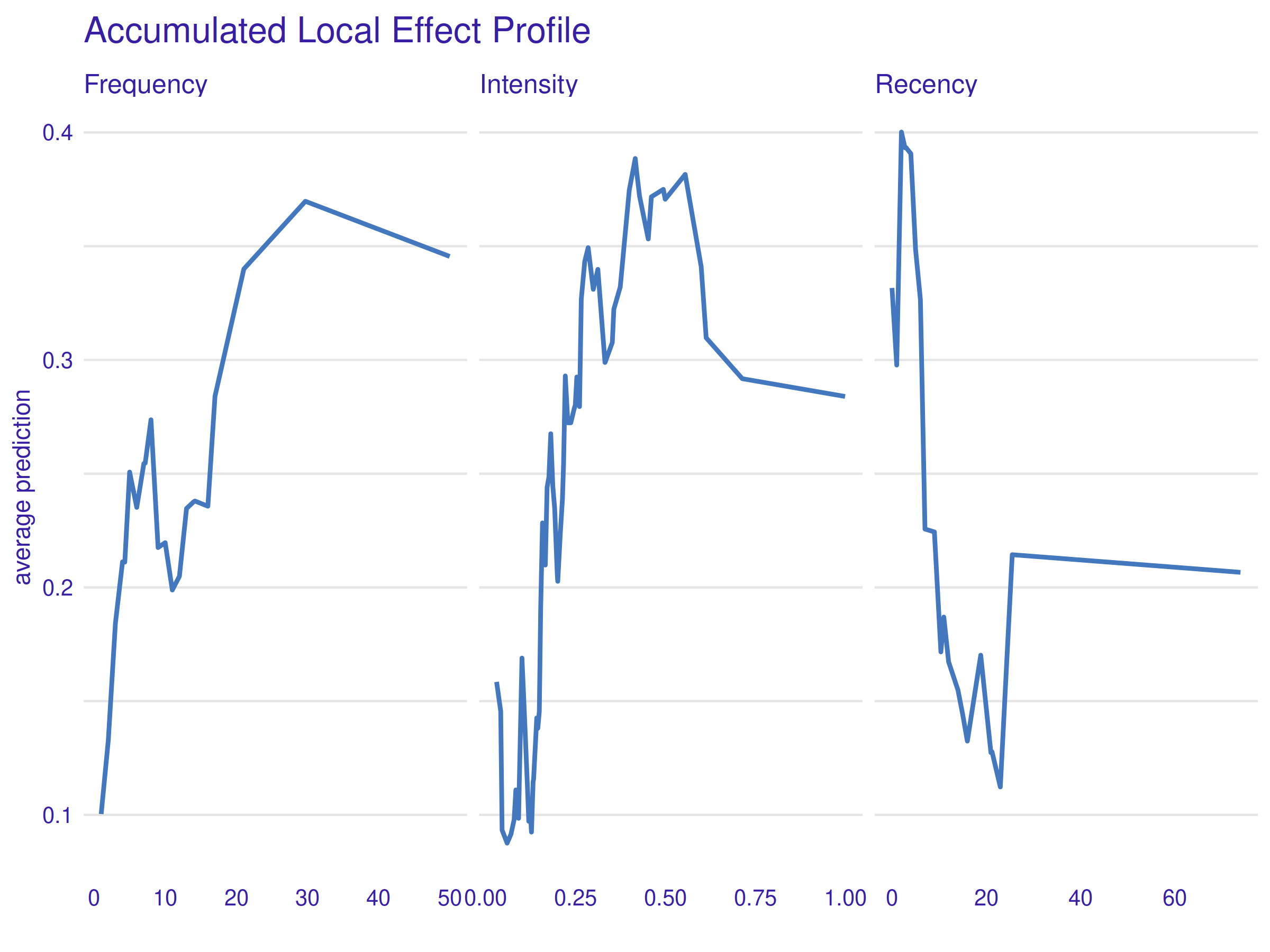

2.3.6.3 Accumulated Local Effect Profile

The method presented in the previous subsection - Partial Dependence Profile, can be misleading if explanatory variables are strongly correlated, which to some extent is still the case here, even after the pre-processing. Accumulated Local Effect Profile (D. Apley 2018) is another method used to explain a variable’s effect on the prediction, which is designed to address the mentioned issue.

Figure 2.38: Accumulated Local Effect Profile. The outcome of this method is very similar to the previous PDP plot.

Although this method addresses the very problem the used data has, no significant difference is visible in the figure 2.38 when compared to the previous figure 2.37. It only reassures that the conclusions presented in the previous subsection are correct.

2.3.7 Local explanations

Local explanations (Barredo Arrieta et al. 2020a) are relevant on a level of a single observation. They aid in understanding how strongly and in what way explanatory variables contribute to the model’s prediction when considering a single instance of data. It is a visible contrast when compared to the previously presented global explanations. However different, they can also help with model diagnostics by, for example, explaining what has pushed the model to a wrong decision.

| Number | Recency | Frequency | Intensity | Donated |

|---|---|---|---|---|

| 342 | 23 | 38 | 0.5067 | 0 |

| 16 | 2 | 5 | 0.5556 | 1 |

| 4 | 2 | 20 | 0.4651 | 1 |

| 109 | 2 | 3 | 0.2500 | 0 |

In the above table 2.3 a reference summary of all the observations used for preparing local explanations is presented.

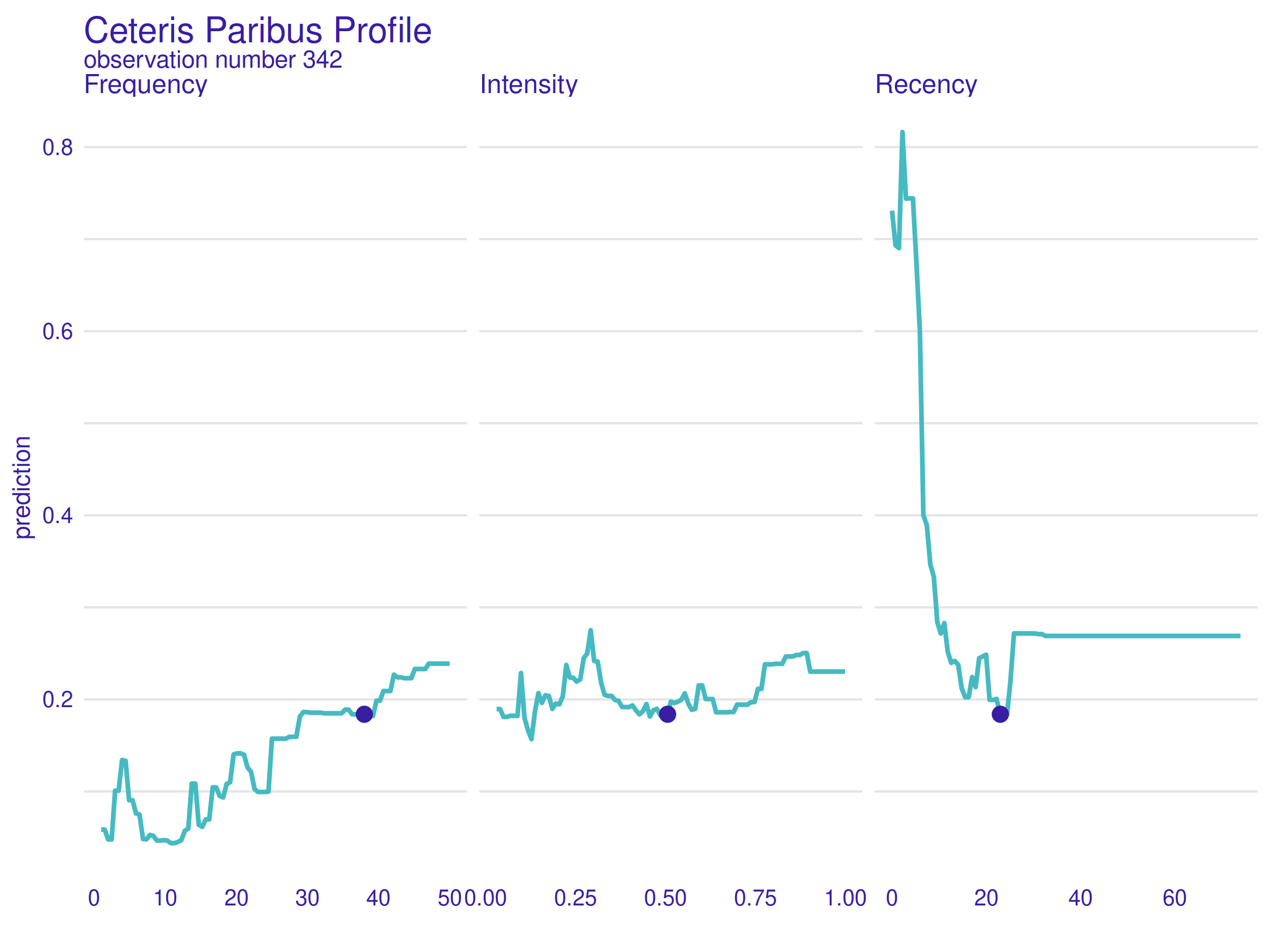

2.3.7.1 Ceteris Paribus Profiles

“Ceteris Paribus” is a Latin phrase that translates to “other things held constant” or “all else unchanged.” The idea behind this method is rather simple - for every variable, prediction is plotted as a function of its changing value while all other variables are held constant. Primary variables’ values come from a previously selected single observation and are marked with dots. This tool is also known as Individual Conditional Expectations (Goldstein et al. 2015).

Figure 2.39: Ceteris Paribus Profile for observation number 342. Because of low Frequency and Intensity values, only a lower Recency value would change the prediction significantly.

In the figure above 2.39, Ceteris Paribus Profile for observation number 342 is presented. This patient is characterized by high Frequency and Intensity values (which has already been shown positive in terms of the model’s prediction), but also a high Recency value (which on the contrary is considered a negative trait). It is worth noting that the model’s prediction for this observation is correct (she/he did not donate).

As the rightmost plot shows, the model’s prediction could be improved significantly only by lowering the Recency value. It is coincident with common sense - if someone was, at a time, an active donor but has not donated for almost two years, the chance of another donation seems low. Also, this local explanation complements the previously presented Permutation Feature Importance well - explanatory variable Recency is the most important one because it can negate the effect of positive values of both the other variables.

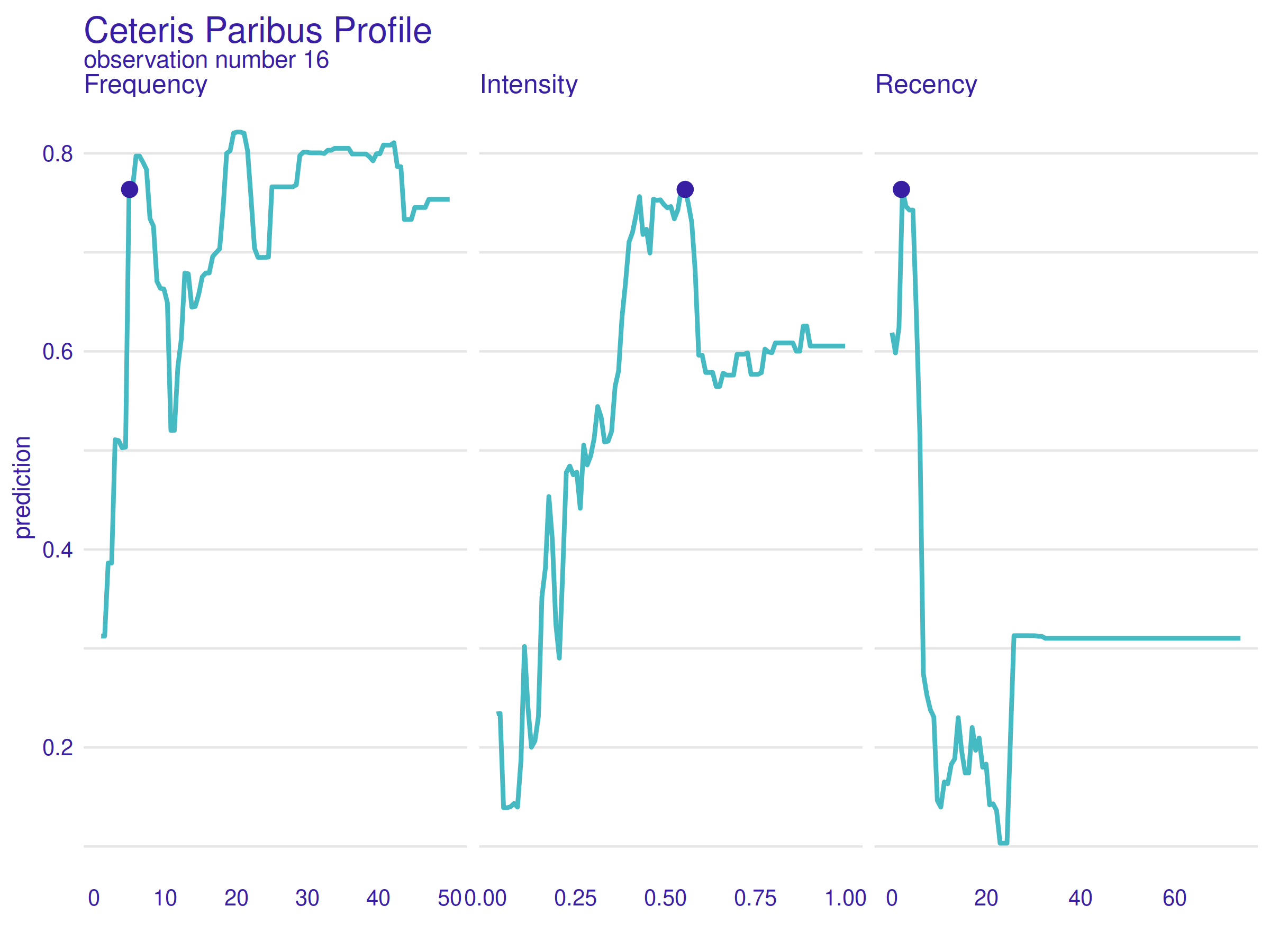

Figure 2.40: Ceteris Paribus Profile for observation number 16. Values of all the variables are nearly perfect for a positive prediction, but what is interesting is that the Intensity value any higher would result in a sudden and significant prediction drop.

In the figure above 2.40, Ceteris Paribus Profile for observation number 16 is presented. This patient is characterized by a moderate Frequency value, a high Intensity value and a low Recency value. It is worth noting that the model’s prediction for this observation is correct (she/he did donate).

What is interesting here is that the Intensity variable value is nearly perfect. Value even a little higher would raise the risk of the hypothetical donation burnout and lower the predicted donation probability. It is convincing proof that the described before patterns hidden in the data are expressed in the model as well and reveal themselves on a level of single observations.

2.3.7.2 Break Down Profiles

The last method presented aims to answer probably the most elementary question that can be asked when trying to explain the model’s behavior - what is the contribution of particular variables to the prediction for a single observation? Break Down Profile (Gosiewska and Biecek 2020) does it by evaluating changes in the mean model’s prediction when the values of consecutive variables are being fixed.

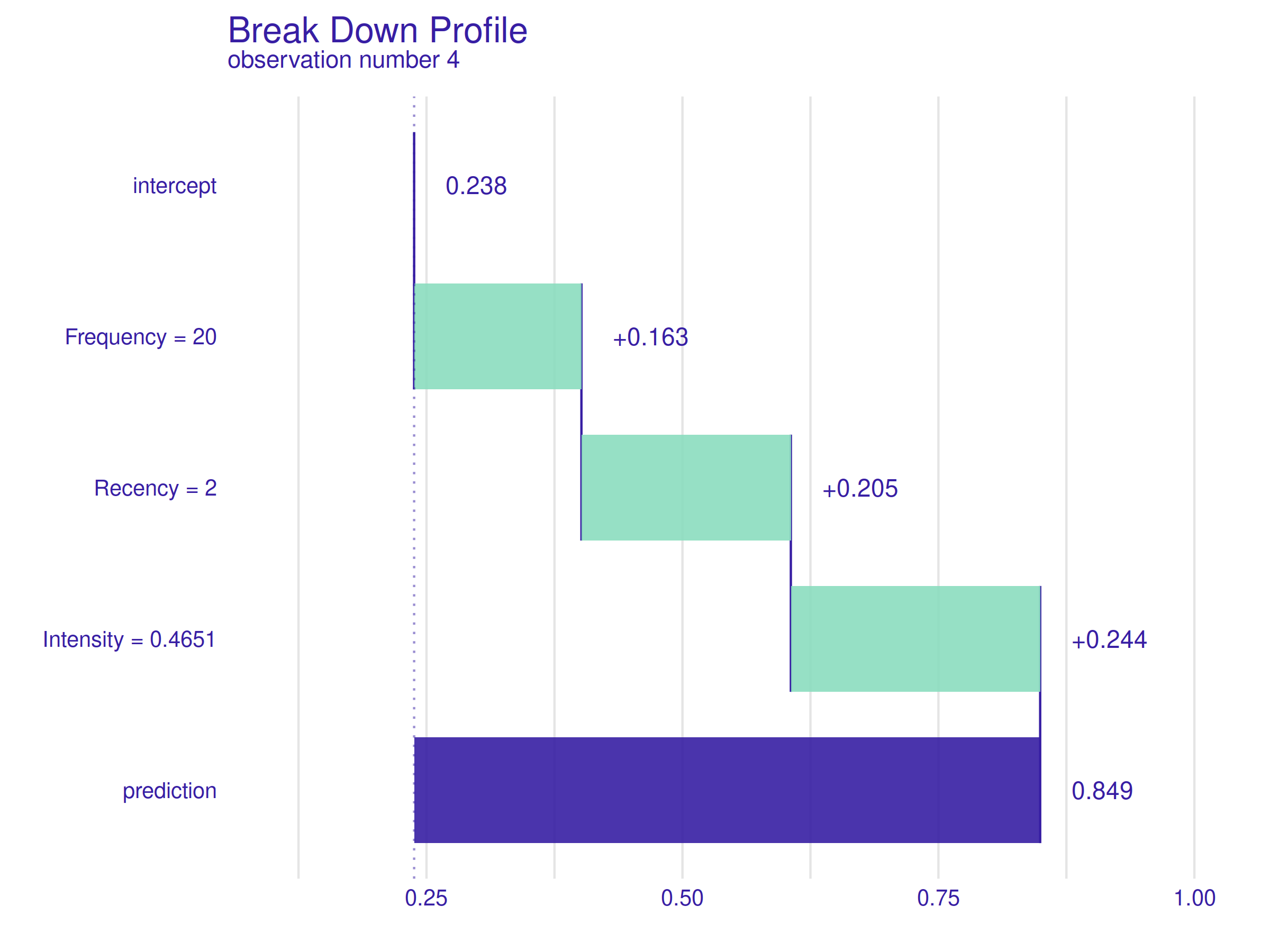

Figure 2.41: Break Down Profile for observation number 4. Values of all the variables have a similar positive effect on the prediction.

In the figure above 2.41, Break Down Profile for observation number 4 is presented. The patient’s characteristics are good and well balanced - twenty completed donations, only two months since the last donation, and an Intensity value of around 0.47, which is high but, as it has been shown already, not too high. It is worth noting that the model’s prediction for this observation is correct (she/he did donate).

The plot shows, the contributions of variables are all positive and well balanced as the values themselves. The result is not surprising but creates a good reference point for the following explanation.

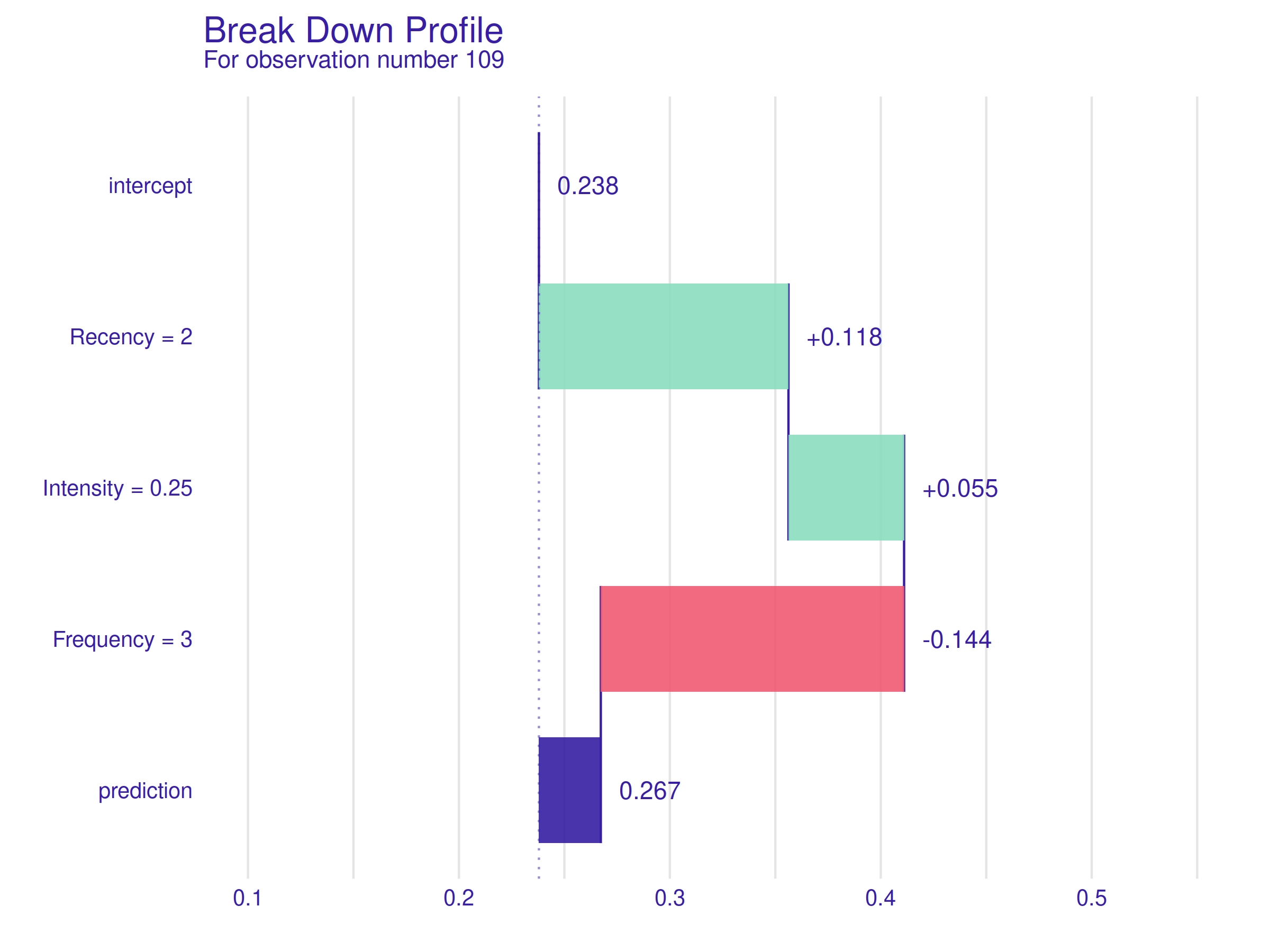

Figure 2.42: Break Down Profile for observation number 109. The low Recency value is once again a positive trait, but a low value of the Intensity variable is neutral for the prediction, and a negative impact of low Frequency value outweighs the previous two, resulting in a negative prediction.

In the figure above 2.42, Break Down Profile for observation number 109 is presented. This patient is chosen deliberately because of the same low Recency value as the previous example but much lower Intensity and Frequency values. It is worth noting that the model’s prediction for this observation is correct (she/he did not donate).

A Recency of two months still has a similar positive impact, but a mediocre Intensity value and a low Frequency change the prediction dramatically. When compared to the previous explanation, this plot is a perfect example of how important for positive prediction is that all variables’ values are well balanced. Previously (first Ceteris Paribus profile), it was visible that a high Recency value can negate the effect of positive values of the other two variables, but here it becomes clear that a low Recency value cannot make up for unfavorable values of the other two variables.

2.3.8 Conclusions and summary

The goal of this work has been achieved and the short answer to the title question - “How to predict the probability of subsequent blood donations?” - is as follows. The most important factor is whether the patient under consideration is active - when her/his last donation took place more than six months ago, the subsequent donation probability falls drastically, and other factors lose their importance. But when the Recency is low, other factors become decisive. A bigger number of past donations is almost always considered positive for the prediction, and higher values of the proposed Intensity feature are favorable only until a certain threshold (around 0.55) when a phenomenon of burnout probably becomes relevant.

Over the years, XAI has become a powerful tool that can produce numerous meaningful insights about the considered model and the data itself. With that said, it is best used with caution, as any statistical tool can yield false conclusions when its assumptions are not carefully checked. We believe that the purpose of our work has been fulfilled. We took care to prepare a precise model based on meaningful data to limit the uncertainty of the obtained model explanations. The same conclusions came up multiple times during the analysis of different explanations, which reassures their correctness. Some of the discovered phenomena are visible not only in the explanations but also in the data itself.