2.4 Various data imputation techniques in R

Authors: Jan Borowski, Filip Chrzuszcz, Piotr Fic (Warsaw University of Technology)

2.4.1 Abstract

There are many suggestions on how to deal with the problem of missing values in data sets. Some solutions are offered in publicly available packages for the R language. In our study, we compared the quality of different data imputation methods and their impact on the performance of machine learning models. We scored different algorithms on various data sets imputed with the chosen packages. The summary of results presents the packages that led to the best model prediction metrics. We also measured the duration of imputation.

2.4.2 Introduction and motivation

2.4.2.2 Motivation

Various imputation techniques are implemented in different packages for the R language. Their performance is often analyzed independently and only in terms of imputation alone. Because of the variety of available tools, it is unclear which package and method to use when a complete data set is needed for a machine learning model. In our study, we examine how methods offered by some popular packages perform on various data sets. We also consider how flexibly these packages deal with different data sets. The most important issue for us is the impact of the performed imputation on later machine learning model performance. We consider one specific type of machine learning task: supervised binary classification. Our aim is to compare the metric scores achieved by various models depending on the chosen imputation method.

2.4.2.3 Definition of missing data

First, we need to clarify the definition of missing data. Missing data means that one or more variables have no values in some observations. This can happen for various reasons, which we can formally define as follows, referring to Rubin (1976):

- MCAR (Missing completely at random)

Values are missing completely at random if the events that lead to the lack of a value are independent of both the observable variables and the unobservable parameters. The missing data are simply a random subset of the data. Analysis performed on MCAR data is unbiased. However, data are rarely MCAR.

- MAR (Missing at random)

Missingness of the values can be fully explained by complete variables. In other words, missing data are not affected by their own characteristics, but are related to some or all of the observed data. This is the most common assumption about missing data.

- MNAR (Missing not at random)

When data are missing not at random, the missingness is related to the characteristic of the variable itself.
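The three mechanisms are often written in terms of a missingness indicator. The notation below is introduced only for this sketch and is not used elsewhere in the chapter: \(Y_{obs}\) and \(Y_{mis}\) denote the observed and missing parts of the data, \(R\) indicates which entries are missing, and \(\psi\) stands for the parameters of the missingness process.

\[
\begin{aligned}
\text{MCAR:}\quad & P(R \mid Y_{obs}, Y_{mis}, \psi) = P(R \mid \psi) \\
\text{MAR:}\quad & P(R \mid Y_{obs}, Y_{mis}, \psi) = P(R \mid Y_{obs}, \psi) \\
\text{MNAR:}\quad & P(R \mid Y_{obs}, Y_{mis}, \psi) \ \text{depends on } Y_{mis}
\end{aligned}
\]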

2.4.2.4 Techniques of dealing with missing data

When preparing a data set for machine learning models, we can generally distinguish two approaches. The first is omission. We can remove observations with at least one missing value, or we can remove whole variables in which missing values are present. This strategy is appropriate if the features are MCAR. However, it is frequently used even when this assumption is not met. It is also impractical when the percentage of missing values is high, because too much data is discarded.

The second approach is imputation, where missing entries are filled in with values. There are many methods of imputation, which we can divide into two groups. Single imputation techniques fill each missing entry with exactly one value. A popular method fills missing entries with the mean, median or mode of the observed values of the same variable. More advanced approaches use predictions from regression models based on the mean and covariance matrix estimated from the complete cases. The main disadvantage of single imputation is that the imputed value is treated as the true value. This approach does not take into account the uncertainty of the missing value prediction.

For this reason multiple imputation was proposed. This method imputes k values for each missing entry, which leads to k complete data sets. The analysis or model is applied to each complete data set and, finally, the results are consolidated. This approach preserves the uncertainty about the range of values which the true value could have taken. Additionally, multiple imputation can be used for both MCAR and MAR data.
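The cost of omission is easy to see on a toy example. The following is a minimal sketch in plain Python (not the R code used in the study); the data values and the helper names `drop_incomplete_rows` and `drop_incomplete_columns` are made up for illustration.

```python
# Toy data set: None marks a missing value (all values are made up).
rows = [
    {"age": 34,   "income": 52000, "city": "warsaw"},
    {"age": None, "income": 61000, "city": "krakow"},
    {"age": 45,   "income": None,  "city": "warsaw"},
    {"age": 29,   "income": 48000, "city": "gdansk"},
]

def drop_incomplete_rows(rows):
    """Omission of observations: keep only rows without any missing value."""
    return [r for r in rows if all(v is not None for v in r.values())]

def drop_incomplete_columns(rows):
    """Omission of variables: keep only columns observed in every row."""
    complete = [k for k in rows[0] if all(r[k] is not None for r in rows)]
    return [{k: r[k] for k in complete} for r in rows]

complete_rows = drop_incomplete_rows(rows)
complete_cols = drop_incomplete_columns(rows)

print(len(complete_rows), "of", len(rows), "observations kept")
print("variables kept:", list(complete_cols[0]))
```

With only two missing entries, half of the observations or two of the three variables are lost, which is why omission becomes impractical as the share of missing values grows.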

2.4.3 Methodology

An experiment like this one can involve many techniques, so we decided to divide our tests into 4 steps:

- Data Preparation,

- Data Imputation,

- Model Training,

- Model Evaluation.

Below we will explain every step in detail.

2.4.3.1 Data Preparation

For test purposes we used 14 data sets from the OpenML library (Casalicchio et al. 2019). Every data set is designed for binary classification and most of them contain both numerical and categorical features. The percentage of missing observations ranged from 0.7% to 35.8%. Most of the sets had a similar number of observations in both classes of the target variable, but some of them were unbalanced. Before data imputation, each data set was prepared. The specific preparations differed between data sets, but the common steps were:

- Removing features which didn’t contain useful information for algorithms (for example, all observations have the same value)

- Correcting typos and converting all strings to lower case to reduce the number of categories

- Converting date to more than one column (for example “2018-03-31” can be converted to three columns: year, month and day)

- Removing or converting columns with too many categories

After cleaning, the data sets were passed to the next step.
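Three of the preparation steps listed above can be sketched as follows. This is an illustrative Python sketch under stated assumptions, not the authors' R code: the helper names, the column names and the sample rows are hypothetical, and trimming whitespace is only one simple example of a "typo" fix.

```python
from datetime import date

def normalise_strings(rows):
    """Lower-case and trim strings so 'Yes' and 'yes ' become one category."""
    return [{k: v.strip().lower() if isinstance(v, str) else v
             for k, v in r.items()} for r in rows]

def drop_constant_columns(rows):
    """Remove features where every observation has the same value."""
    keep = [k for k in rows[0] if len({r[k] for r in rows}) > 1]
    return [{k: r[k] for k in keep} for r in rows]

def split_date(rows, column):
    """Replace an ISO date string with separate year, month and day columns."""
    out = []
    for r in rows:
        r = dict(r)                      # copy, so the input is not modified
        value = r.pop(column)
        d = date.fromisoformat(value) if value is not None else None
        for part in ("year", "month", "day"):
            r[f"{column}_{part}"] = getattr(d, part) if d else None
        out.append(r)
    return out

# Made-up rows; None marks a missing value.
raw = [
    {"source": "survey", "answer": "Yes",  "visit": "2018-03-31"},
    {"source": "survey", "answer": "yes ", "visit": "2019-11-02"},
    {"source": "survey", "answer": "No",   "visit": None},
]

clean = split_date(drop_constant_columns(normalise_strings(raw)), "visit")
print(clean[0])
```

Missing dates stay missing after the split, so the later imputation step still sees them.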

2.4.3.2 Data Imputation

Clean data sets were split into two data sets, training and testing, in proportion \(1/4\) respectively. This split was performed randomly and only once for every data set, which means that every imputation technique used the same split. Imputation was performed separately for the train and test sets. Before the split, we also removed the target column to avoid using it in imputation. For our study, we chose five packages designed for missing data imputation in the R language and one self-implemented basic technique:

- Mode and median: A simple technique of filling missing values with the mode (for categorical variables) or the median (for continuous variables) of the complete values in a variable. Implemented with basic R language functions. It is a very simple method and serves as the baseline against which more complex algorithms are compared.

- mice (van Buuren and Groothuis-Oudshoorn 2011b): The package performs multivariate imputation by chained equations (MICE), which is a type of multiple imputation. The method is based on Fully Conditional Specification, where each incomplete variable is imputed by a separate model. The imputation methods from the mice package do not need support from any other method, because they can impute both numeric and categorical features.

- missMDA (Josse and Husson 2016): Package for multiple imputation of missing values. Data sets are imputed with a principal component method, the regularized iterative FAMD algorithm (factorial analysis for mixed data). The number of dimensions for the factorial analysis has to be estimated first.

- missForest (Stekhoven and Buehlmann 2012b): The package imputes with predictions of a random forest model trained on complete observations. It works on data with complex interactions and non-linear relations and supports parallel calculations. The algorithm can be used on both numeric and categorical features and can perform imputation without the help of other methods.

- softImpute (Hastie and Mazumder 2015b): Package for matrix imputation with nuclear-norm regularization. The algorithm works like EM, solving an optimization problem using a soft-thresholded SVD algorithm. It works only with numeric features. To compare it with other algorithms on the same data, we use softImpute for numeric variables and the mode for categorical variables. Alternatively, it is possible to use softImpute for numeric features and a different algorithm for categorical variables, but we decided that this approach may lead to unreliable results.

- VIM (Kowarik and Templ 2016c): Package for visualization and imputation of missing values. It offers iterative robust model-based imputation (IRMI). In each iteration, one variable is used as the response variable and the remaining variables as the regressors. This method additionally creates new columns indicating whether an observation was imputed or not. We decided not to use these columns, because other methods do not create them.

After imputation, we added the target variable back to both sets. All methods used the same parameters for all data sets. Not all methods were able to impute all 14 data sets. This fact is taken into account in the subsequent analysis of the results.
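The overall flow of this step, using the median/mode baseline as the imputation method, can be sketched as below. This is a plain-Python illustration rather than the authors' R implementation; the function names, the fixed seed, the `test_fraction=0.25` default and the data are assumptions made for the example.

```python
import random
from statistics import median, mode

def split_indices(n, test_fraction=0.25, seed=1):
    """Shuffle row indices once with a fixed seed; return (train, test) indices."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = round(n * test_fraction)
    return idx[n_test:], idx[:n_test]

def impute_median_mode(rows):
    """Fill None with the median (numeric) or mode (categorical) of observed values.

    Assumes every column has at least one observed value.
    """
    fill = {}
    for col in rows[0]:
        observed = [r[col] for r in rows if r[col] is not None]
        numeric = all(isinstance(v, (int, float)) for v in observed)
        fill[col] = median(observed) if numeric else mode(observed)
    return [{c: fill[c] if v is None else v for c, v in r.items()} for r in rows]

# Made-up data: None marks a missing value, "y" is the binary target.
data = [
    {"age": 31,   "city": "warsaw", "y": 0},
    {"age": None, "city": "krakow", "y": 1},
    {"age": 45,   "city": "warsaw", "y": 0},
    {"age": 27,   "city": None,     "y": 1},
    {"age": 52,   "city": "warsaw", "y": 0},
    {"age": 38,   "city": "gdansk", "y": 1},
    {"age": 40,   "city": "krakow", "y": 0},
    {"age": 29,   "city": "warsaw", "y": 1},
]

# Remove the target before imputation so it cannot leak into imputed values.
target = [r["y"] for r in data]
features = [{k: v for k, v in r.items() if k != "y"} for r in data]

# One split, reused for every imputation method.
train_idx, test_idx = split_indices(len(data))

# Impute the two parts separately, then add the target back.
train_x = impute_median_mode([features[i] for i in train_idx])
test_x = impute_median_mode([features[i] for i in test_idx])
train = [dict(x, y=target[i]) for x, i in zip(train_x, train_idx)]
test = [dict(x, y=target[i]) for x, i in zip(test_x, test_idx)]
```

Any other imputation method would replace `impute_median_mode` while the split and the handling of the target stay unchanged, which is what makes the later comparison fair.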

2.4.3.3 Model training

For the classification task we used four classification algorithms:

- Extreme Gradient Boosting,

- Random Forest,

- Support Vector Machines,

- Logistic Regression

All methods were used through the mlr package (Bischl, Lang, et al. 2016b). For hyperparameter tuning, we also used methods from the same package. For all data sets, the four classifiers were trained and tuned on the same train sets. To select parameter values we used grid search. For each algorithm, the following parameters were tuned (more information about the parameters can be found in the package documentation):

- XGB (eta, gamma, max_depth, subsample)

- Random Forest (num.trees, min.node.size)

- SVM (gamma)

- Logistic Regression (alpha, nlambda)

We will not focus on this part of the experiment. The most important aspect of this step is that every model was trained in the same way. This means that differences in results can be attributed only to the imputation technique used beforehand.
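Grid search itself is a simple idea: evaluate every combination of candidate values and keep the best one. The sketch below illustrates it in plain Python and does not use or reproduce the mlr tuning API. The candidate values in `xgb_grid` and the scoring function `toy_evaluate` are hypothetical stand-ins; in the study the score would come from a model trained on the train set.

```python
from itertools import product

def grid_search(param_grid, evaluate):
    """Evaluate every parameter combination and return the best one."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:          # ties keep the first combination found
            best_params, best_score = params, score
    return best_params, best_score

# Hypothetical candidate values for the tuned XGB parameters
# (eta is the learning rate).
xgb_grid = {
    "eta": [0.1, 0.3],
    "gamma": [0, 1],
    "max_depth": [3, 6],
    "subsample": [0.5, 1.0],
}

def toy_evaluate(params):
    """Stand-in for a validation score; NOT a real model."""
    return -abs(params["eta"] - 0.1) - abs(params["max_depth"] - 6) / 10

best_params, best_score = grid_search(xgb_grid, toy_evaluate)
print(best_params)
```

The number of evaluations is the product of the grid sizes (here 2 × 2 × 2 × 2 = 16), which is why only a few parameters per algorithm are usually tuned this way.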

2.4.3.4 Model evaluation

After the previous steps, we have trained models and test sets. In the final step we evaluate the models and imputation methods. For every imputation method and algorithm we calculate accuracy and the F1 score, given by the formula \(F_1 = 2\cdot\frac{\text{precision}\cdot\text{recall}}{\text{precision} + \text{recall}}\). We chose accuracy as the basic and most popular metric, and F1 because it is a better metric for unbalanced sets. A detailed discussion of the results follows in the next section.
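The difference between the two metrics on unbalanced data can be checked with a short worked example. The sketch below is plain Python written for illustration (the study used R); returning 0 when precision or recall is undefined is a convention assumed here, not something stated by the authors.

```python
def accuracy(y_true, y_pred):
    """Share of observations whose predicted class equals the true class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def f1_score(y_true, y_pred, positive=1):
    """F1 = 2 * precision * recall / (precision + recall) for the positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0   # assumed convention
    recall = tp / (tp + fn) if tp + fn else 0.0      # assumed convention
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Unbalanced toy case: a model that always predicts the majority class.
y_true = [0] * 9 + [1]
y_pred = [0] * 10
acc_majority = accuracy(y_true, y_pred)    # 0.9
f1_majority = f1_score(y_true, y_pred)     # 0.0, because recall is 0

# A more balanced toy case: tp = 2, fp = 1, fn = 1.
y_true2 = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred2 = [1, 1, 0, 1, 0, 0, 0, 0]
acc_mixed = accuracy(y_true2, y_pred2)     # 0.75
f1_mixed = f1_score(y_true2, y_pred2)      # 2/3
```

In the first case accuracy looks good although the positive class is never found, while F1 drops to 0.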

2.4.4 Results

After the long and tedious process of data imputation, it is finally time to evaluate our imputation methods. The methods were trained and tested on 14 data sets and evaluated strictly with the two metrics mentioned earlier. Before analyzing the scores, it is worth mentioning that all algorithms were tested with tuned hyperparameters, so the scores should be meaningful. Table 1 below presents the average metrics achieved by the models, depending on the imputation method. As described earlier, we decided to use two measures of algorithm effectiveness:

- F1 score

- Accuracy

These two measures complement each other well because together they allow us to measure the effectiveness of our imputations and algorithms on both balanced and unbalanced sets.

| Method | Accuracy | F1 |
|---|---|---|
| softImpute | 0.86 | 0.75 |
| mice | 0.85 | 0.75 |
| missForest | 0.85 | 0.74 |
| VIM | 0.85 | 0.76 |
| median/mode | 0.83 | 0.75 |
| missMDA | 0.82 | 0.80 |

Our experiment did not identify a single best imputation algorithm, but we can draw some interesting conclusions from it. First of all, we can treat median/mode imputation as a kind of baseline method. Surprisingly, it performs quite well among the other, often quite sophisticated, methods. ML models based on it achieved over 80% accuracy on average. However, it is hard to decide whether this is a high score or not, because we are only able to compare the algorithms against each other. To say anything more meaningful about our methods, we look at the distribution of the scores.

2.4.4.0.1 Distributions of scores

Taking a brief look at the distributions of accuracy scores (Figure 1), it is unclear which algorithm performs best. All medians are at approximately the same level, and the first and third quartiles of almost all score distributions have the same values. Only missMDA is a bit lower than the others, but it is too early to draw any conclusions.

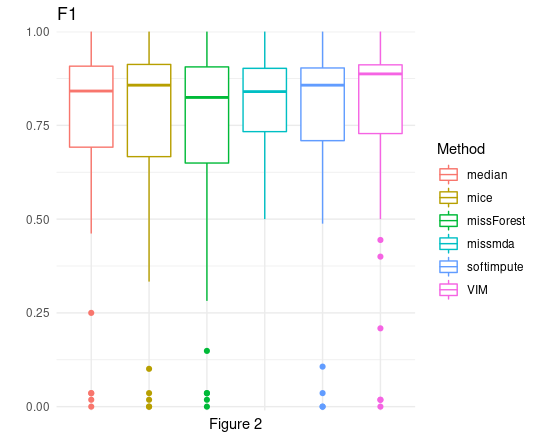

F1 scores (Figure 2) give us much more information. The first thing that becomes apparent in that plot is that there are some very low values for every type of imputation. However, this is simply because some of our data sets were very small, so none of our algorithms was able to achieve recall above 0 on them. Apart from that, the scores of all algorithms were once again quite close to each other. It is also worth noting that not all algorithms managed to perform imputation on all data sets. For this reason, an analysis of the means and distributions alone may not be fully objective. To create a fair comparison, we decided to use two different methods of comparing and ranking these algorithms, so that we are able to choose a winner.

2.4.4.1 Median/mode baseline score

To begin with, we treat median/mode as the basic form of imputation and compare all other methods against it. As the measure of the models for a given imputation, we take the average F1 score achieved by all models on data imputed with that method. As the measure of an imputation method, we take the difference between this average and the corresponding average for median/mode imputation. The table below shows how much the average measure for every imputation method differed from the median/mode baseline.

| Method | Difference in average F1 vs. median/mode |
|---|---|
| softImpute | 0.02 |
| missForest | 0.01 |
| mice | -0.02 |
| missMDA | -0.11 |
| VIM | -0.11 |

The results achieved here (Table 2) are quite surprising. First of all, the maximum gain in F1 score is only 0.02, and it is achieved by softImpute. What is most surprising, however, is that only 2 out of 5 algorithms managed to perform better than median/mode. The score achieved by softImpute may partly be caused by the fact that it uses mode imputation for categorical variables. For data sets with many more categorical variables than numeric ones, its imputation becomes very similar to median/mode. These scores raise questions about the purpose of using sophisticated algorithms, but we cannot yet draw firm conclusions about them. We can definitely note that these algorithms need to be used with care, because they may lead to a large loss in scores, as much as 0.11, as shown here for missMDA and VIM. It is worth noting the order of the algorithms here, as we will use another method to compare the scores.
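The baseline comparison described in this subsection amounts to a difference of two averages. Here is a minimal Python sketch of that computation; the F1 values, the method names `method_a` and `method_b`, and the assumption that scores have already been averaged over data sets are all hypothetical and do not reproduce the figures in Table 2.

```python
from statistics import mean

# Hypothetical F1 scores per imputation method and model (made-up numbers).
f1 = {
    "median/mode": {"xgb": 0.74, "rf": 0.76, "svm": 0.70, "logreg": 0.72},
    "method_a":    {"xgb": 0.76, "rf": 0.78, "svm": 0.72, "logreg": 0.74},
    "method_b":    {"xgb": 0.70, "rf": 0.72, "svm": 0.66, "logreg": 0.68},
}

def model_average(scores):
    """Measure of the models: average F1 over all models for one imputation."""
    return mean(scores.values())

def difference_to_baseline(f1, baseline="median/mode"):
    """Measure of an imputation method: its model average minus the baseline's."""
    base = model_average(f1[baseline])
    return {method: round(model_average(scores) - base, 2)
            for method, scores in f1.items() if method != baseline}

differences = difference_to_baseline(f1)
print(differences)
```

A positive value means that models trained on data imputed with the method scored higher on average than models trained on median/mode imputed data.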

2.4.4.2 Second approach

As a second approach, we ranked the imputation methods on each data set according to the model scores and awarded points for each place. As before, the score of an imputation method on a given data set is the average F1 score achieved by all models on the imputation result. The best rank is 1, and it is given to the imputation method with the highest average F1 score. The final score of a method is the sum of its points across all data sets, so the method with the lowest number of points wins. This approach allows us to give the worst (highest) rank to imputation methods which failed on a specific data set and still compare them fairly with the others.

| Method | Score |
|---|---|
| softImpute | 38 |
| median/mode | 39 |
| missForest | 48 |
| mice | 49 |
| VIM | 58 |
| missMDA | 69 |

The scores achieved here (Table 3) resemble those achieved before. The order is not the same, but the winner remains the same. Once again, median/mode imputation performed very well, outperforming many complicated algorithms. Because both approaches lead to similar rankings, we consider our results stable enough to draw conclusions from them.
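The rank-based scoring of the second approach can be sketched as follows. This is an illustrative Python sketch with made-up scores and hypothetical method names. Two details are assumptions, since the text does not specify them: a failed method receives a rank equal to the number of methods, and ties are broken by input order.

```python
def rank_points(scores_by_dataset, methods):
    """Sum per-data-set ranks (1 = highest average F1); lower totals are better.

    A method missing from a data set's scores is treated as failed and
    receives the worst possible rank, len(methods) (an assumption).
    """
    totals = {m: 0 for m in methods}
    worst = len(methods)
    for scores in scores_by_dataset:
        ordered = sorted(scores, key=scores.get, reverse=True)
        for rank, method in enumerate(ordered, start=1):
            totals[method] += rank
        for method in methods:
            if method not in scores:
                totals[method] += worst
    return totals

methods = ["median/mode", "method_a", "method_b"]

# Hypothetical average F1 per method on three data sets.
scores_by_dataset = [
    {"median/mode": 0.70, "method_a": 0.75, "method_b": 0.60},
    {"median/mode": 0.80, "method_a": 0.78},                    # method_b failed
    {"median/mode": 0.65, "method_a": 0.66, "method_b": 0.70},
]

totals = rank_points(scores_by_dataset, methods)
print(totals)
```

In this toy example `method_a` wins with 5 points, ahead of median/mode with 6 and `method_b` with 7, even though `method_b` was best on one data set.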

2.4.4.3 Times

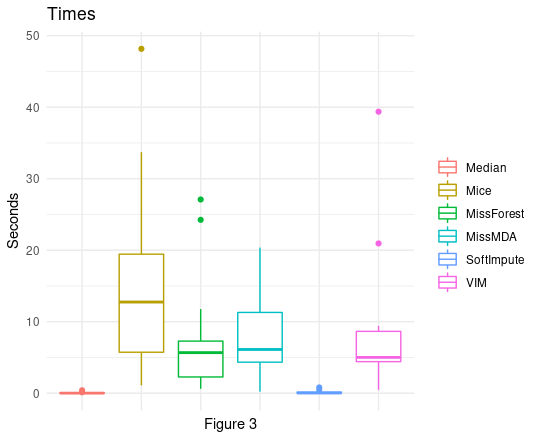

As a final tool for comparing algorithms, we compared imputation times. Even the algorithm with the best score is of little practical use if its imputation time is prohibitive, so it is important to include this factor in the final evaluation.

As we can see (Figure 3), imputation times can vary heavily between algorithms. There is no point in creating rankings like the ones we used to analyze scores, but it is worth noting that our previous winner, softImpute, is very quick: its plot looks the same as the median/mode plot, which is quite an achievement. At the other extreme is mice, whose times can reach very high values.
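Measuring imputation time only requires wrapping the imputation call with a clock. The text does not describe how the authors timed their R code, so the following Python sketch is only an assumption about one reasonable way to do it; `timed` and `fill_with_zero` are hypothetical names.

```python
import time

def timed(impute, data):
    """Run an imputation function and return (result, elapsed seconds)."""
    start = time.perf_counter()          # monotonic, high-resolution clock
    result = impute(data)
    elapsed = time.perf_counter() - start
    return result, elapsed

def fill_with_zero(values):
    """Trivial stand-in for an imputation method: replace None with 0."""
    return [0 if v is None else v for v in values]

imputed, seconds = timed(fill_with_zero, [1, None, 3])
print(imputed, f"{seconds:.6f} s")
```

Timing every method on the same data sets and the same machine keeps the comparison meaningful.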

2.4.5 Summary and conclusions

Summing up our work, we made a number of interesting observations about these imputation algorithms and the way they deal with different data. However, it is hard to issue final judgments, because we only had 14 data sets available. Despite that, we have several conclusions. First of all, it is worth noting how well simple methods of imputation performed. Median/mode imputation was a tough competitor and was rarely outperformed in our tests. The second issue is the choice of a metric for assessing the performance of imputation algorithms. We have shown 2 different approaches, and the scores they produced differed slightly. Taking that into consideration, we cannot say that we have a clear winner in our competition, but we can give some advice to all those interested in imputing their data sets. The advice is quite simple but, as we have shown, quite powerful: start by imputing your data set with the most basic method, median/mode imputation, and then try to beat its score with a different algorithm, for example softImpute, which performed quite well in our tests.

References

Batista, Gustavo E. A. P. A., and Maria Carolina Monard. 2003. “An Analysis of Four Missing Data Treatment Methods for Supervised Learning.” Applied Artificial Intelligence 17 (5-6): 519–33. https://doi.org/10.1080/713827181.

Bischl, Bernd, Michel Lang, Lars Kotthoff, Julia Schiffner, Jakob Richter, Erich Studerus, Giuseppe Casalicchio, and Zachary M. Jones. 2016b. “Mlr: Machine Learning in R.” Journal of Machine Learning Research 17 (170): 1–5. http://jmlr.org/papers/v17/15-066.html.

Bono, Christine, L. Ried, Carole Kimberlin, and Bruce Vogel. 2007. “Missing Data on the Center for Epidemiologic Studies Depression Scale: A Comparison of 4 Imputation Techniques.” Research in Social & Administrative Pharmacy: RSAP 3 (April): 1–27. https://doi.org/10.1016/j.sapharm.2006.04.001.

Casalicchio, Giuseppe, Bernd Bischl, Dominik Kirchhoff, Michel Lang, Benjamin Hofner, Jakob Bossek, Pascal Kerschke, and Joaquin Vanschoren. 2019. OpenML: Open Machine Learning and Open Data Platform. https://CRAN.R-project.org/package=OpenML.

Hastie, Trevor, and Rahul Mazumder. 2015b. SoftImpute: Matrix Completion via Iterative Soft-Thresholded SVD. https://CRAN.R-project.org/package=softImpute.

Josse, Julie, and François Husson. 2016. “missMDA: A Package for Handling Missing Values in Multivariate Data Analysis.” Journal of Statistical Software 70 (1): 1–31. https://doi.org/10.18637/jss.v070.i01.

Kowarik, Alexander, and Matthias Templ. 2016c. “Imputation with the R Package VIM.” Journal of Statistical Software 74 (7): 1–16. https://doi.org/10.18637/jss.v074.i07.

Musil, Carol, Camille Warner, Piyanee Klainin-Yobas, and Susan Jones. 2002. “A Comparison of Imputation Techniques for Handling Missing Data.” Western Journal of Nursing Research 24 (December): 815–29. https://doi.org/10.1177/019394502762477004.

Rubin, Donald B. 1976. “Inference and Missing Data.” Biometrika 63 (3): 581–92. https://doi.org/10.1093/biomet/63.3.581.

Stekhoven, Daniel J., and Peter Buehlmann. 2012a. “MissForest - Non-Parametric Missing Value Imputation for Mixed-Type Data.” Bioinformatics 28 (1): 112–18.

Stekhoven, Daniel J., and Peter Buehlmann. 2012b. “MissForest - Non-Parametric Missing Value Imputation for Mixed-Type Data.” Bioinformatics 28 (1): 112–18.

Su, Xiaoyuan, Taghi Khoshgoftaar, and Russ Greiner. 2008. “Using Imputation Techniques to Help Learn Accurate Classifiers.” Proceedings - International Conference on Tools with Artificial Intelligence, ICTAI 1 (December): 437–44. https://doi.org/10.1109/ICTAI.2008.60.

van Buuren, Stef, and Karin Groothuis-Oudshoorn. 2011b. “mice: Multivariate Imputation by Chained Equations in R.” Journal of Statistical Software 45 (3): 1–67. https://www.jstatsoft.org/v45/i03/.