2.1 Default imputation efficiency comparison

Authors: Jakub Pingielski, Paulina Przybyłek, Renata Rólkiewicz, Jakub Wiśniewski (Warsaw University of Technology)

2.1.1 Abstract

Imputation of missing values is one of the most common preprocessing steps when working with many real-life datasets. There are many ways to approach this task. In this article, we will be using 3 different simple imputation methods and 6 more sophisticated methods from popular R packages but without changing or tuning their parameters. We will test how their imputation affects the score of models trained on classification datasets. Effectiveness of the imputations will be checked by measuring models’ performance on different measures but focusing on one which is sensitive to class imbalance - Weighted TPR-TNR. We will try to answer the question of whether simple imputation methods are still insignificant for more complex methods with default parameters, or maybe in this case simple imputation methods are not only faster but more efficient?

2.1.2 Introduction and Motivation

Data is becoming more and more useful and valuable in various industries. Companies started to gather data on a scale that has not been imagined before. But with more data comes more problems. One of them is missing values. Omitting observations affected by them is one way, but why doing it while the information that they possess might be still valuable? This is why the imputation of missing values is essential when dealing with real-life data. But there are various methods of imputation and for an inexperienced user, it might be confusing and hard to decide which one to use. It may be even harder to design metrics that allow comparing methods between them. We decided that we should judge the effectiveness on the performance score of the model which has been trained on dataset imputed using some method. So when model trained on data imputed with \(method_1\) has a higher performance score than the same model trained on the same data but imputed with \(method_2\), that means that \(method_1\) is more effective.

Sometimes omitting rows or even removing columns and NA can give satisfying results but the majority of the time, it is worse than simple imputation methods. For simple tasks mean/median and mode are fast and computationally efficient and can give good results. But they have no variance and because of that can give worse results on complex datasets than more robust methods. On the other hand, more complex models like for example random forests are in theory more advanced and despite adding algorithmic complexity and computational costs to training procedure, are thought to be better in quality of imputation. So models that had been trained on data that has been previously imputed with more complex methods are more likely to achieve higher performance scores.

Furthermore, parameter changing and tuning can be difficult and time-consuming. Deciding which method is the best for an inexperienced user who will not be tuning hyperparameters is the purpose of this article. We are benchmarking methods that have different complexity from various packages along with simple imputation methods but without parameter tuning, so that casual users can benefit from it. The default methods from packages are easy to use and despite not living up to their potential they might acceptable results.

2.1.4 Methodology

In our article, we compare different methods of imputations - simple and advanced methods (from several R packages) - and measuring their efficiency on multiple models and classification datasets. This section of the article contains information about selected datasets, methods of imputations, ML algorithms, and metrics used to assess the quality of the classification after applying imputation to given datasets. Also, at the end of the section, the workflow of our experiment is briefly presented.

2.1.4.1 Datasets

In the experiment, we used various OpenML datasets, some of them belong to OpenML100 repository (Bischl, Casalicchio, et al. 2017). Datasets have been selected so that they have missing values and datasets differ in the number of observations, features, and percentage of data missing. All these datasets were designed for the classification task. The information about these datasets is given in Table 2.1.

| Dataset | Number of observations | Number of features | Imbalance ratio | Percent of missing values |

|---|---|---|---|---|

| cylinder-bands | 540 | 31 | 1.368 | 5.299 |

| credit-approval | 690 | 16 | 1.248 | 0.607 |

| adult | 5000 | 13 | 3.146 | 0.958 |

| eucalyptus | 736 | 16 | 1.307 | 3.864 |

| dresses-sales | 500 | 13 | 1.381 | 14.692 |

| colic | 368 | 20 | 1.706 | 16.291 |

| sick | 3772 | 28 | 15.329 | 2.171 |

| labor | 57 | 17 | 1.850 | 33.643 |

| hepatitis | 155 | 20 | 3.844 | 5.387 |

| vote | 435 | 17 | 1.589 | 5.301 |

| echoMonths | 130 | 10 | 1.031 | 7.462 |

Imbalance ratio is computed as follows \(imbalance\_{ratio} = n/p\) where \(n\) - negative class and \(p\) - positive class.

2.1.4.2 Imputing strategies

We selected and analyzed nine different imputation strategies that are known and willingly used by programmers. Some of them belong to simple methods of imputation and others are more complex. A description of these methods is below.

- remove columns - removing columns with any missing values from the dataset.

- random fill - imputing with random values from the given feature.

- median and mode - imputing with median (for numerical features) and mode (for categorical features) from the given feature.

- missForest - imputing with Random Forests (using missForest package (Stekhoven and Buehlmann 2012a)). Function from this package builds a random forest model for each feature with missing values. Then it uses the model to predict the value of missing instances in the feature based on other features.

- vim knn - imputing with k-Nearest Neighbor Imputation based on a variation of the Gower Distance (using VIM package (Kowarik and Templ 2016a)). An aggregation of the k values of the nearest neighbors is used as an imputed value.

- vim hotdeck - imputing with sequential, random (within a domain) hot-deck algorithm (using VIM package). In this method, the dataset is sorted and missing values are imputed sequentially running through the dataset line (observation) by line (observation).

- vim irmi - imputing with Iterative Robust Model-Based Imputation (IRMI) (using VIM package). In each step of the iteration (inner loop), one variable is used as a response variable and the remaining variables serve as the regressors. The procedure is repeated until the algorithm converges (outer loop).

- pmm (mice) - imputing with Fully Conditional Specification and predictive mean matching (using mice package (van Buuren and Groothuis-Oudshoorn 2011a)). For each observation in a feature with missing values, this method finds observation (from available values) with the closest predictive mean to that feature. The observed value from this “match” is then used as an imputed value.

- cart (mice) - imputing with Fully Conditional Specification and classification and regression trees (using mice package). CART models select a feature and find a split that achieves the most information gain. Splitting process is repeated until the maximum height is reached or branches have only samples from one class.

The first three methods will be called simple imputation methods. They are self-explanatory and do not have any hyperparameters to tune. In contrast, algorithms provided in missForest, VIM, and mice packages are complex and may require costly hyperparameters tuning. We used default implementations in our experiments, based on the assumption that additional complexity and more hyperparameters might create a too steep learning curve for an average data scientist.

Since neither mlr nor caret package enables using custom imputing methods in modeling pipeline, the only way to avoid data leakage was to impute values separately for train and test datasets. This imposes a severe limitation on our imputation effectiveness, especially for small datasets, since information learned during imputing values in training data set cannot be used during test set imputation.

2.1.4.3 Classification algorithms

All models were based on classifiers from the caret package (Kuhn 2008) with AUC being the summary metric that was used to select the optimal model. Our goal was to use classification models that are as different from each other as possible, to check if model complexity and flexibility have an impact on the effectiveness of imputation strategy. The most effective imputation strategy should enable models to achieve the highest performance scores. Selected classification algorithms are listed below.

- Bagged CART - simple classification trees with bootstrap aggregation. treebag builds multiple models from different subsets of data. At the end constructs a final aggregated and more accurate (according to the chosen metric) model.

- Random Forest - very flexible, robust, and popular algorithm, with no assumptions about data. Multiple classification trees trained on bagged data. For this article implementation called ranger will be used because of its lower computing time leverage.

- Multivariate Adaptive Regression Spline - assumes that relation between features and response can be expressed by combining two linear models at a certain cutoff point - can be regarded as a model with flexibility between linear and nonlinear models. Implementation called earth will be used.

- k-Nearest Neighbors - assumes that similar observations are close to each other in feature space, assumes a very local impact of features. knn is a simple algorithm and will be a good benchmark for others.

- Naive Bayes - nonlinear model with the assumption that all features are independent of each other. Pros of nb are: fast in training, easily interpretable, has little hyperparameters to tune.

While some of these algorithms have multiple hyperparameters to tune it will not be covered in this article. Tuning for different data can be time-consuming especially when datasets are large and tuning them could mitigate the effect of data imputation. Based on this factor default implementations and parameters of those algorithms will be used. It might not get the best score in metrics but it will be fair for all the imputation methods. It can be argued that the impact of imputation will be even more visible without parameter tuning. All algorithms will be trained and tested on the same data but some will be encoded.

2.1.4.4 Metrics

We performed the aforementioned imputing methods for 11 datasets from the OpenML repository. Since all datasets were designed for binary classification problems and many of them were heavily imbalanced, we used 4 metrics to evaluate our models’ performance (see Table below).

| Measure | Formula |

|---|---|

| AUC | Measures area under plot of Sensitivity against Specificity |

| Balanced Accuracy | \(\frac{1}{2}(Sensitivity+Specificity)\) |

| Geometric Mean | \(\sqrt{Sensitivity*Specificity}\) |

| Weighted TPR-TNR | \((Sensitivity*\frac{N}{P+N})+(Specificity*\frac{P}{P+N})\) |

Area under ROC curve (AUC) is used as a general performance metrics, while the rest is used to specifically evaluate model performance on imbalanced data sets.

Accuracy measures how a good model is incorrectly predicting both positive and negative cases. If your dataset is imbalanced, then “regular” accuracy may not be enough (cost of misclassification of minority class instance is higher than for majority class instance). That is why we use Balanced Accuracy, which is the arithmetic mean of the TPR (Sensitivity) and FPR (Specificity).

Geometric Mean (of Sensitivity and Specificity) is a metric that measures the balance between classification performances on both the majority and minority classes.

Weighted TPR-TNR is a very promising new single-valued measure for classification. It takes into account the imbalance ratio of the dataset and assigns different weights to the TPR and TNR (where P is the total number of positive cases and N is the total number of negative cases).

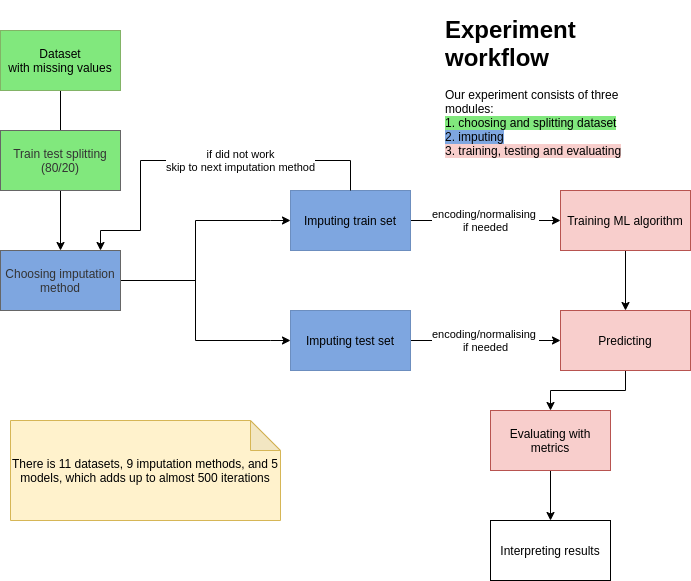

2.1.4.5 Experiment

To sum up, in the experiment we used 5 different classification algorithms and 11 different datasets to assess the performance of the imputation of missing values using the previously described measures. The experiment workflow was presented in Figure 2.1.

FIGURE 2.1: Experiment workflow

The caret package in R programming was used to conduct the experiment. Classification algorithms used in this experiment were: ranger, earth, treebag, knn, nb existing in function train() from this package.

The procedure followed to assess the performance of the imputation of missing values was as follows:

- The dataset was divided into train and test subsets with a split ratio of 80/20.

- We imputed values separately in train and test datasets. This process was repeated for all nine different imputing strategies. The time of imputation was measured independently outside this workflow.

- All imputed train and test datasets were used in five classification algorithms.

- Numerical variables were centered and scaled to mean 0 and standard deviation 1. For knn datasets were also encoded with one-hot encoding.

- The classifiers were built using transformed train datasets.

- The classification results were obtained using a transformed test datasets.

Before dividing into training and test subsets and building classifiers, seed equal to one was set.

2.1.5 Results

Before analyzing the results, three caveats:

- some of our datasets were quite small, with 100 or so observations

- some of our datasets were heavily imbalanced

- some imputations methods did not cover or generally impute data due to the specificity of the dataset. These methods are:

remove columns 3/11 failed (labor, vote, and colic datasets)

pmm (mice) and cart (mice) 3/11 failed (cylinder-bands, credit-approval, and labor datasets)

vim irmi 5/11 failed (all of the above)

For removing columns explanation is simple. In some datasets, missing values are in every column so after removing all columns model has no data to be trained on. For other methods, it is more complicated to explain. Apart from removing columns methods they did not throw an error and tried to imput the data but without success.

While analyzing the results we are looking at two things:

- for which imputation method the metrics have the best results

- time of imputation

Especially for big datasets, sophisticated imputation methods required many hours or even days of computing. For that reason datasets with tens of thousands of observations were truncated to 5000 (refers to the adult dataset).

2.1.5.1 Imputation results

2.1.5.1.1 Mean metrics

With this first look at the results of imputations performance metric scores will be taken into consideration. In Table 2.2 was presented this performance metrics ordered by Weighted TPR-TNR. Note that in this experiment methods that failed to impute data will be omitted so the whole picture of efficiency among imputation metrics might be distorted. This issue will be addressed in the next section.

| Imputation name | AUC | BACC | GM | Weighted TPR-TNR |

|---|---|---|---|---|

| random fill | 0.8717 | 0.8037 | 0.7900 | 0.7687 |

| median and mode | 0.8670 | 0.7931 | 0.7783 | 0.7516 |

| vim hotdeck | 0.8534 | 0.7839 | 0.7701 | 0.7486 |

| missForest | 0.8515 | 0.7879 | 0.7611 | 0.7453 |

| vim knn | 0.8667 | 0.7771 | 0.7563 | 0.7445 |

| cart (mice) | 0.8581 | 0.7884 | 0.7702 | 0.7379 |

| pmm (mice) | 0.8568 | 0.7847 | 0.7678 | 0.7360 |

| vim irmi | 0.8220 | 0.7474 | 0.7215 | 0.6812 |

| remove columns | 0.7648 | 0.6623 | 0.5541 | 0.5523 |

As seen above random fill is the best imputation method in all metrics. Second in place in all metrics was median and mode. cart (mice) was third 2 times (GM and BACC) and vim knn with vim hotdeck were third each one time. Simple imputation methods were superior for these datasets. The worst idea seems to be removing columns and using irmi.

2.1.5.1.2 Ranking of results

Each model has its specific features and might be thriving after applying different imputation methods. Seeing which method was the best (and the worst) for each model should give a more wide perspective. For creating ranking only Weighted TPR-TNR will be taken into consideration. This is because this metric takes into account the imbalance ratio of the datasets and thanks to its equal evaluation for all datasets will be ensured. Rank feature here is average of ranks from all datasets so if some imputation was second in the metric it would be given rank 2. If two imputations had the same values in the metric they would both have the same rank. If the imputation method failed to impute it was assigned the last rank. Top 3 best imputations was presented in Table 2.3.

| Model name | 1th method imputation | 2th method imputation | 3th method imputation |

|---|---|---|---|

| ranger | vim knn | missForest | pmm (mice) |

| earth | random fill | median and mode | cart (mice) |

| treebag | random fill | cart (mice) | median and mode |

| knn | missForest | random fill | vim knn |

| nb | random fill | median and mode | missForest |

Random fill is the best in 3 out of 5 models. For ranger and knn the best are VIM knn and missForest respectively. In our top 3 ranking also appears median and mode and two mice methods - pmm and cart.

Now let’s see the top 3 worst imputations in Table 2.4.

| Model name | 7th method imputation | 8th method imputation | 9th method imputation |

|---|---|---|---|

| ranger | vim hotdeck | vim irmi | remove columns |

| earth | pmm (mice) | vim irmi | remove columns |

| treebag | vim knn | vim irmi | remove columns |

| knn | vim irmi | pmm (mice) | remove columns |

| nb | cart (mice) | vim irmi | remove columns |

Removing columns is the worst choice when imputing data, which is not surprising. It removes vital information from data resulting in significantly worse performance. Second, from the end is almost for all models vim irmi.

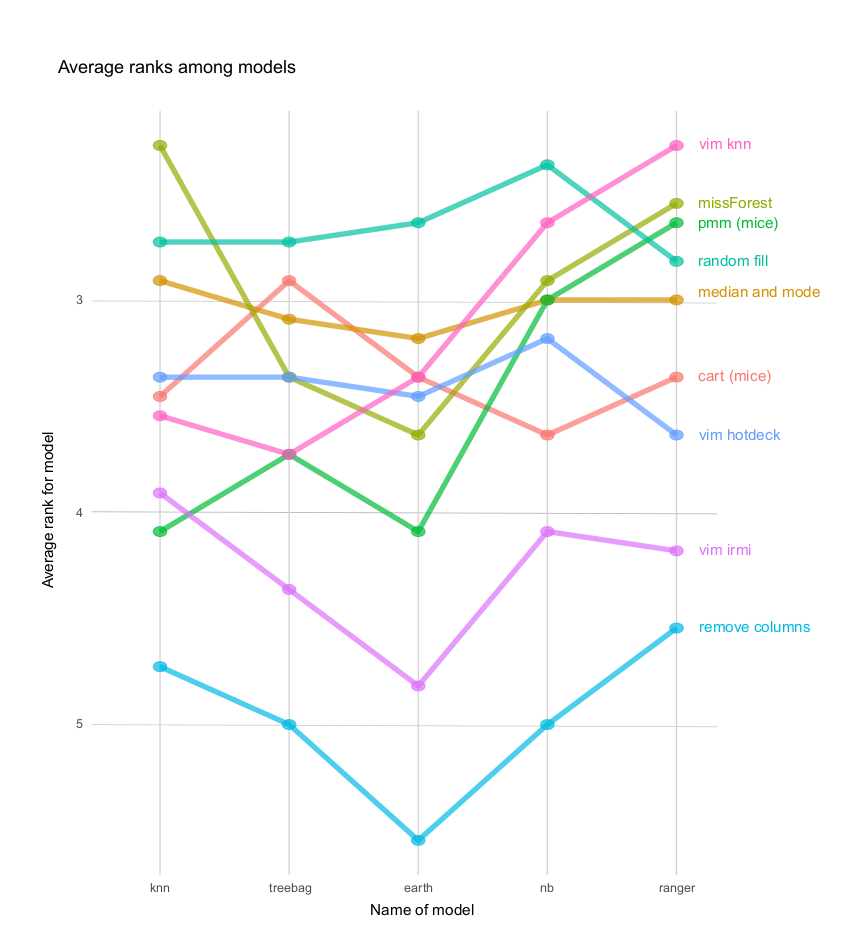

Visualization in Figure 2.2 shows the mean rank of imputation methods for each model. Ranks are a great way to visualize how effective the imputation really is. They are not sensitive to the dataset, the best rank will always be one, unlike some performance measure that might be significantly different between datasets. The average was measured on 11 datasets. The higher the rank, the better. Some trends and stability of methods are visible.

FIGURE 2.2: Average rank plot

Random fill is the best method. It was the best for three models and the second-best for one model. Median and mode and missForest were also really good for all models. The worst without a doubt is removing columns. Some methods are really good for certain models but very bad for others (for example pmm (mice)) in contrary to random fill and median and mode which were equally good for all models but not the best for knn and ranger.

2.1.5.1.3 Similarity of imputations

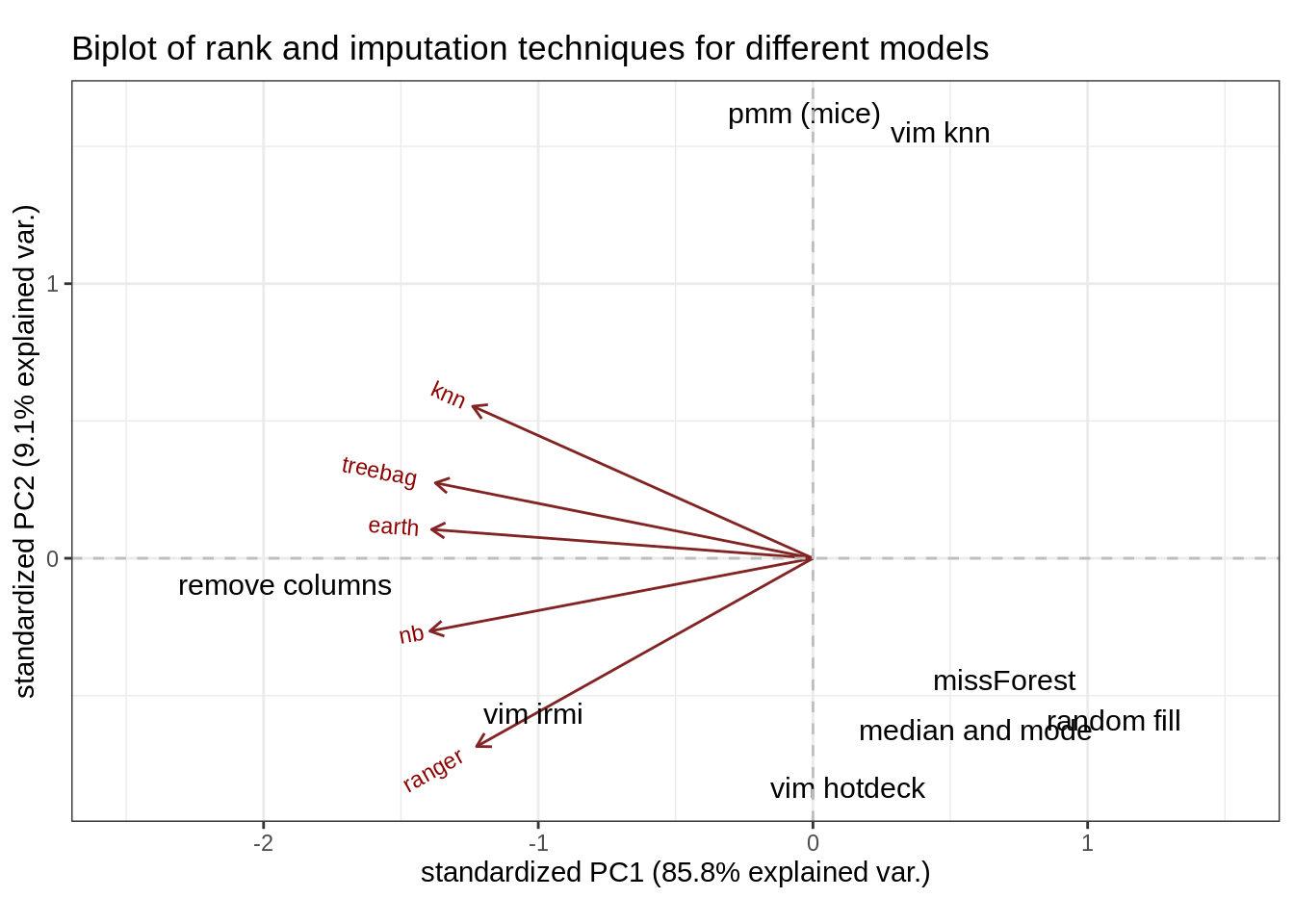

To see how similar are imputations to each other we used biplot. Biplot is using PCA to reduce the dimensionality of the data in our case of results. If imputation names are close to each other, it means that they produce similar results. The closer the arrows are to each other the more metrics values are correlated. Data came from our imputation results for all models without NA values. Then for each imputation data was averaged and processed by PCA (see Figure 2.3).

FIGURE 2.3: Rank biplot

Some imputation techniques seem to be distant from others. Mainly VIM’s irmi, remove columns, pmm, and knn. Judging by the red arrows (loadings plot), there are similarities in ranks among treebag and earth models. Knn and ranger are not very correlated with ranks achieved by them.

2.1.5.2 Imputation time

In imputation not only results matter. While having small dataset imputation time is not something worth considering. But when having tens or hundreds of thousands of observations some more complex imputations might take a really long time. In this case, maximum samples in data are 5000 but differences are already visible. This experiment was repeated 10 times to ensure higher reliability of data.

2.1.5.2.1 Mean time

| Imputation name | Time |

|---|---|

| remove columns | 0.0140 |

| random fill | 0.0154 |

| median and mode | 0.0272 |

| vim hotdeck | 0.2484 |

| vim knn | 1.6833 |

| pmm (mice) | 8.1867 |

| missForest | 8.9694 |

| vim irmi | 10.1300 |

| cart (mice) | 20.0550 |

As one might predict, imputation with mean and mode removing columns or filling NA’s with random value are the fastest methods (see Table 2.5). Imputations from the VIM package also have small computation time. Imputing with missForest package or using predictive mean matching in mice package is more than 1000 times slower, whilst using cart instead of pmm in mice package additionally increases computation time more than threefold.

2.1.5.2.2 Distribution of time

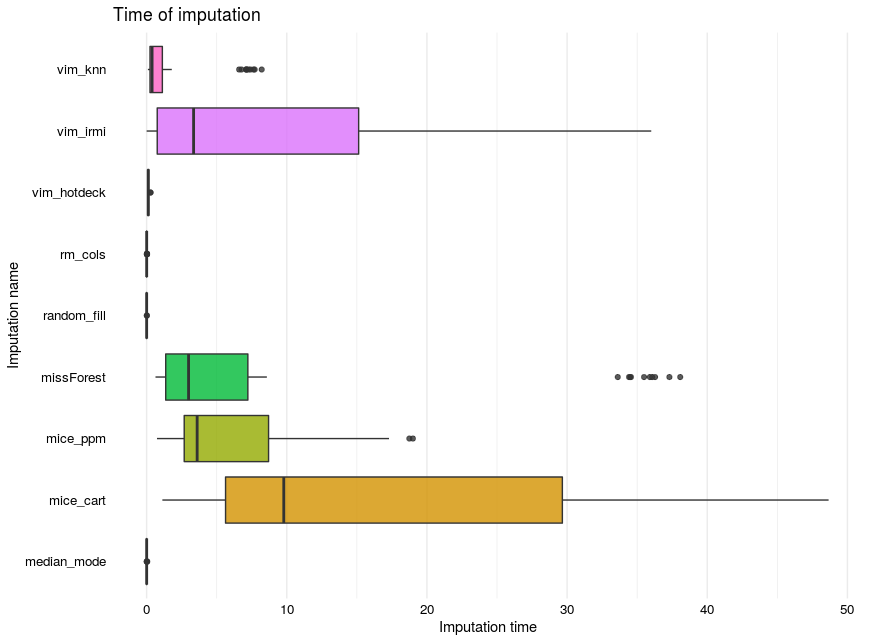

FIGURE 2.4: Time distribution

Imputation time is shown in the shape of boxplots (see Figure 2.4). A clear distinction between mice, missForest, and VIM’ irmi packages functions which are significantly slower than other methods. On contrary here imputation time was taken into consideration even when NA’s were produced.

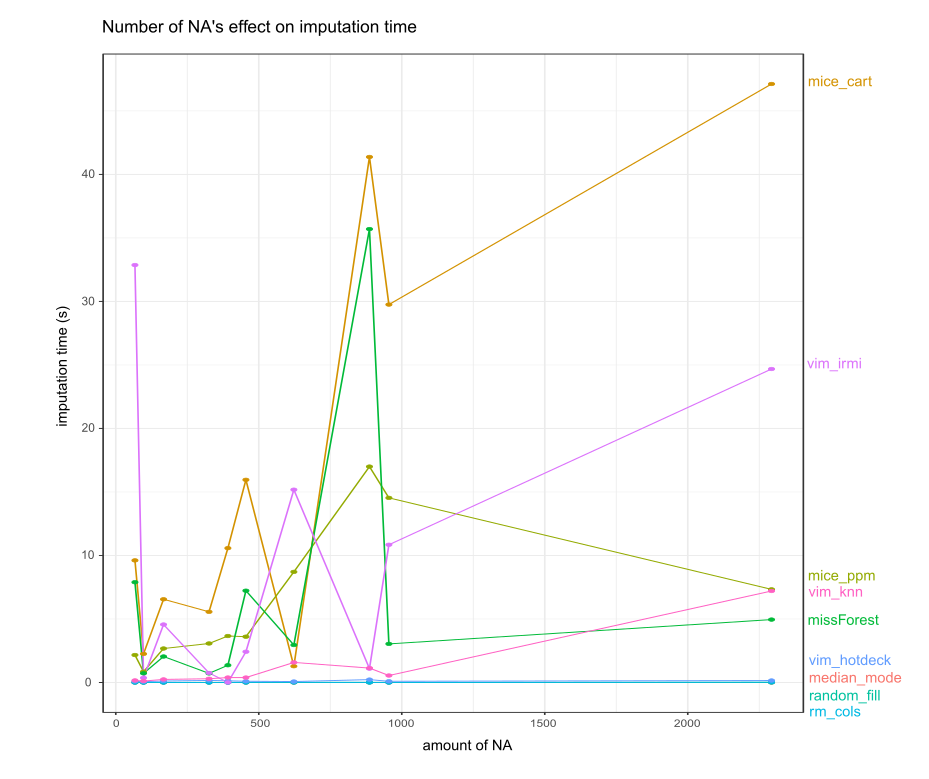

2.1.5.2.3 Influence of amount of NA on time

FIGURE 2.5: Number of NA and imputation time

The time needed to process NA’s is very volatile (see Figure 2.5). For simple imputation methods and VIM’s hotdeck time needed compared to the rest of the imputations seems to be constant. This plot was achieved by getting information from datasets and imputing ten times. Then the median was taken from times of dataset imputation. Fluctuations might be the effect of different data types, a number of factors, continuous variables distribution.

2.1.6 Summary and conclusions

Our experiments have unexpected results - it turns out that using a naive strategy of imputing with random values resulted in models with the best performance. The reason for the poor performance of more complex imputation methods might be the fact that we used default values of hyperparameters and some tuning may be required to show the full potential of these methods. Some datasets were simple and small therefore potential imputation leverage could be insignificant. What we did prove was that simple imputation methods might still be valuable despite their apparent flaws. What was significant was the big overhead that simple imputations had in terms of results achieved in time. While having a big dataset with a limited number of missing values they might be the best solution because some methods use whole data to train it’s missing value imputation.

We might not ignore huge computational overhead that is the result of using complex computing methods. The point of this paper was to test those methods for inexperienced users and find the most valuable one for them. As for now considering both performance and time the best for most models are random fill, median and mode. They achieve more than satisfying results, are reliable and work for every dataset. Judging by the results it is better to use simple imputation methods than more complex methods with default parameters from various packages.

References

Bischl, Bernd, Giuseppe Casalicchio, Matthias Feurer, Frank Hutter, Michel Lang, Rafael Mantovani, Jan van Rijn, and Joaquin Vanschoren. 2017. “OpenML Benchmarking Suites and the Openml100,” August.

Jadhav, Anil. 2020. “A Novel Weighted Tpr-Tnr Measure to Assess Performance of the Classifiers.” Expert Systems with Applications 152 (March): 113391. https://doi.org/10.1016/j.eswa.2020.113391.

Kowarik, Alexander, and Matthias Templ. 2016a. “Imputation with the R Package VIM.” Journal of Statistical Software 74 (7): 1–16. https://doi.org/10.18637/jss.v074.i07.

Kuhn, Max. 2008. “Building Predictive Models in R Using the Caret Package.” Journal of Statistical Software, Articles 28 (5): 1–26. https://doi.org/10.18637/jss.v028.i05.

Little, R. J. A., and D. B. Rubin. 2002. Statistical Analysis with Missing Data. Wiley Series in Probability and Mathematical Statistics. Probability and Mathematical Statistics. Wiley. http://books.google.com/books?id=aYPwAAAAMAAJ.

Stekhoven, Daniel J., and Peter Buehlmann. 2012a. “MissForest - Non-Parametric Missing Value Imputation for Mixed-Type Data.” Bioinformatics 28 (1): 112–18.

van Buuren, Stef, and Karin Groothuis-Oudshoorn. 2011a. “mice: Multivariate Imputation by Chained Equations in R.” Journal of Statistical Software 45 (3): 1–67. https://www.jstatsoft.org/v45/i03/.